Content:

SeaDataNet is

a major operational infrastructure for managing, indexing and providing access

to ocean and marine data sets and data products, acquired by European

organisations from research cruises and other observational activities in

European coastal marine waters, regional seas and the global ocean. It develops,

governs and promotes common standards for metadata and data formats, controlled

vocabularies, quality flags, and software tools and services for marine data

management, which are widely adopted and used for improving FAIRness (Findable,

Accessible, Interoperable and Reusable). SeaDataNet core partners are the

National Oceanographic Data Centres (NODCs) and major marine research

institutes in Europe. It has established a large European and international

network, working closely together with operational oceanography, marine

research, and marine environmental monitoring communities as well as with other

major marine data management infrastructures. SeaDataNet is a major partner in

the development and operation of the European Marine Observation and Data

network (EMODnet),

aimed at supporting the EU initiatives on Marine Knowledge 2020 and Blue Growth

and the Marine Strategy Framework Directive (MSFD). SeaDataNet has a close

cooperation and MoU with Copernicus Marine Environmental Monitoring Service (in

particular CMEMS-INSTAC). Since

the mid-1990s, SeaDataNet has expanded and matured and currently provides

federated discovery and access to more than 110 data centres for physics,

chemistry, geology, bathymetry, and biology. As part of the EU HORIZON 2020 SeaDataCloud project, SeaDataNet is

further developing its discovery, access, ingestion, publishing and

visualisation services as well as its widely adopted SeaDataNet

standards. The project aims at upgrading

and expanding the SeaDataNet architecture and services, making use of cloud

services, taking into account requirements from major stakeholders, such as

EMODnet, CMEMS, EuroGOOS, EU Directives such as MSFD, MSP, and INSPIRE,

international cooperations, such as ICES, IOC-IODE, GOOS, GEOSS, and the European Open Science Cloud (EOSC)

challenge. The SeaDataCloud project is implemented in a partnership with EUDAT,

a leading European network of academic computing centres that is closely involved

in the EOSC developments. The major objectives of the SeaDataCloud project are:

- Improve discovery and access services for users and data providers
- Optimise the connection of data providers and their data centres and data streams to the infrastructure
- Improve interoperability with other European and international networks to give users an overview of, and access to, additional data sources
- Develop a Virtual Research Environment with tools for analyzing data and generating and publishing data products
- Develop, update and publish data products for European sea regions.

This is the fourth edition of the newsletter in the

framework of the SeaDataCloud project. It gives you information about the progress

of a number of SeaDataCloud developments such as the launch of the upgraded CDI

data discovery and access service, the near finalization of the prototype

SeaDataNet Virtual Research Environment, the delivery of the SeaDataNet SWE

Toolkit for managing operational oceanographic data streams from input to

storage to distribution and visualization, how SeaDataNet is contributing to

the Ocean Standards & Best Practices initiative of IOC-IODE, and other

topics. We hope you will enjoy this newsletter and will be encouraged to visit

the SeaDataNet portal to try out its services and to follow its

evolution. We aim to reach as many people as possible, so please forward it to

anyone you know who may be interested.

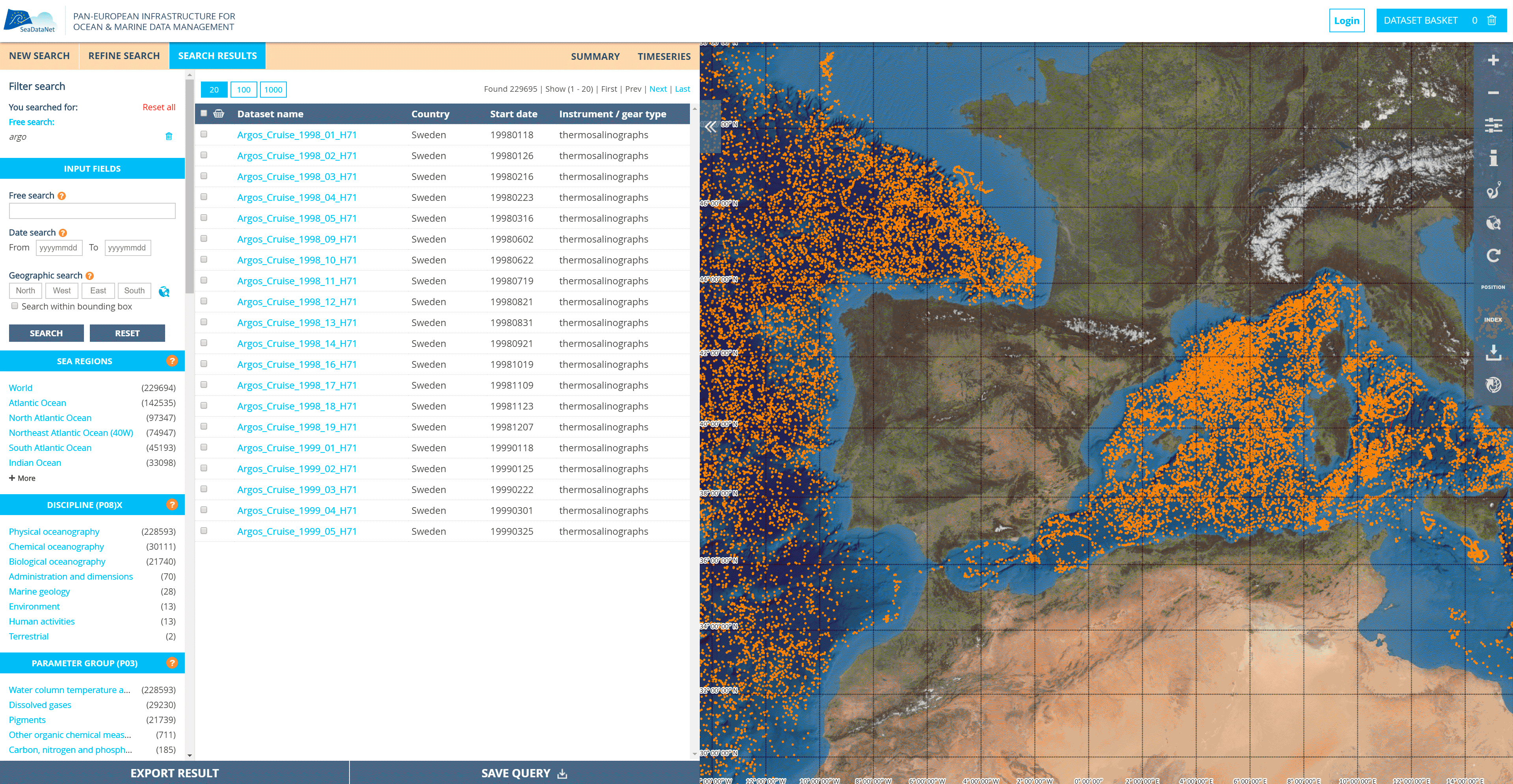

A major focus of the SeaDataCloud

project has been directed towards upgrading the SeaDataNet Common Data Index (CDI) service

for

discovery and access to a wealth of marine and ocean data. In October 2019,

the joint developments of the SeaDataNet network of oceanographic data centres and

EUDAT, a leading European network of academic computing centres, resulted in the launch of a new and innovative

version of the CDI service. It replaces the previous version, which had been in

operation since 2015; the original version was released in 2007.

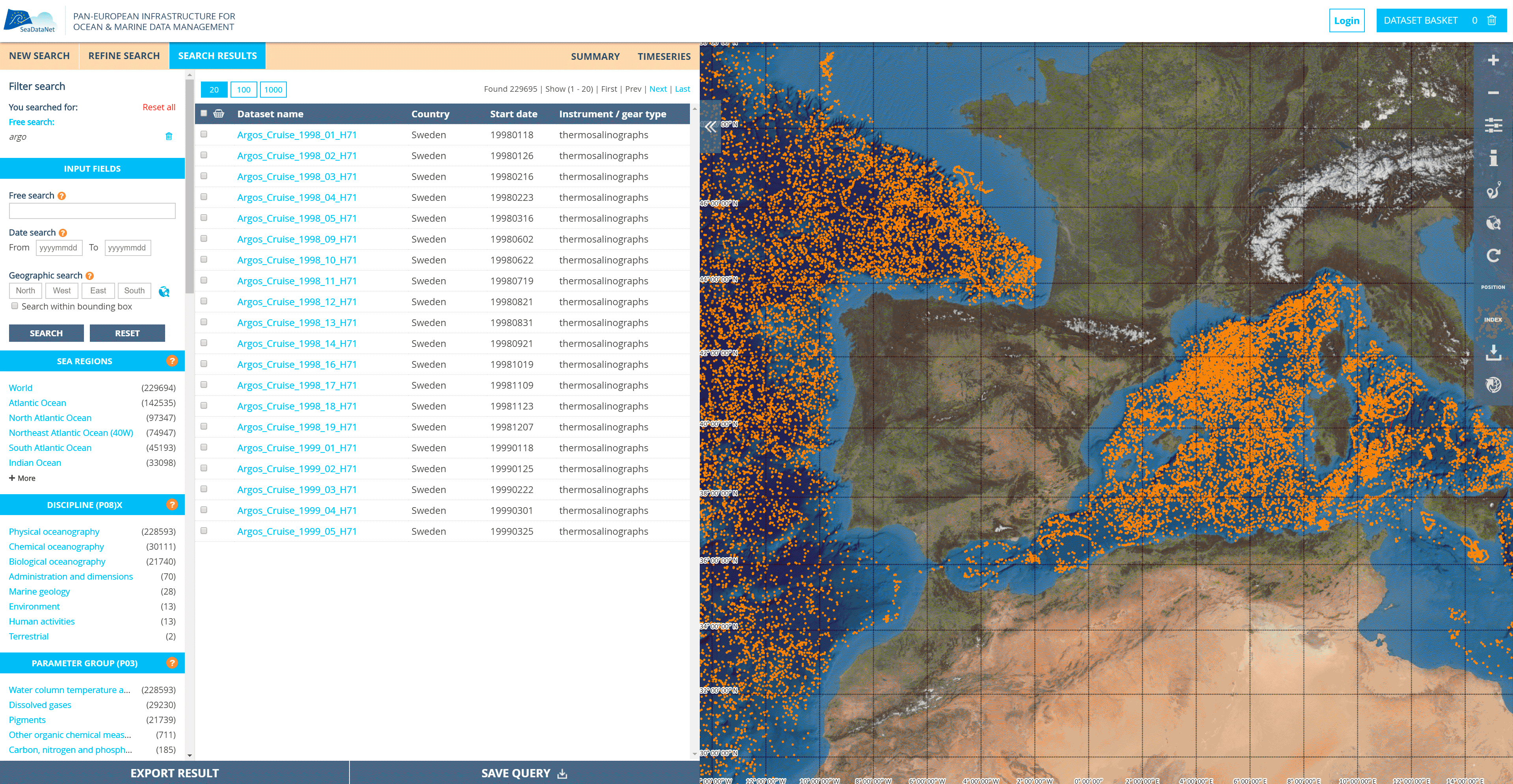

Image:

New dynamic user interface of the upgraded CDI data discovery and access

service, giving discovery and access to more than 2.3 million data sets from 110

data centres

Architecture:

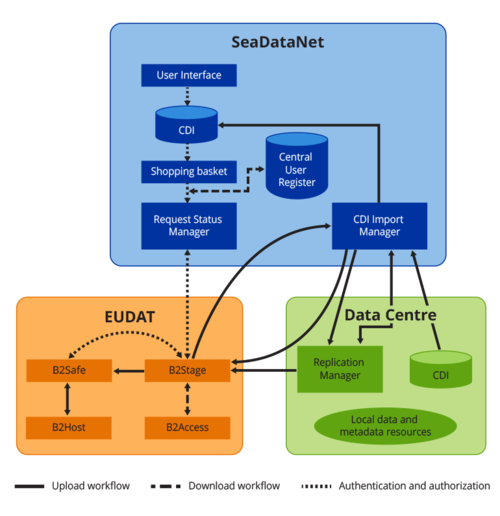

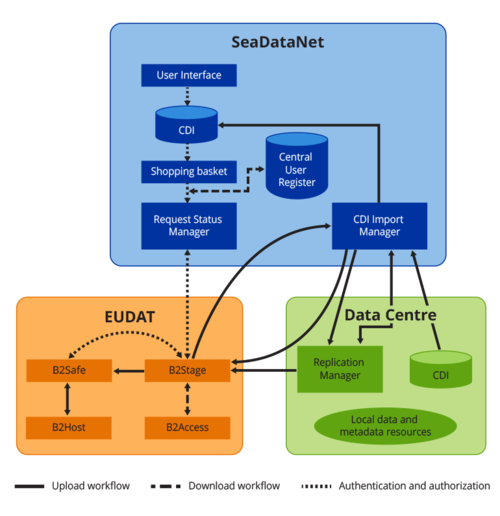

The

new architecture of the CDI service makes a distinction between the front-end

with discovery, shopping and downloading of data sets by users, and the back-end

for importing new and updated CDI metadata and related data entries (including

versioning) by data centres. The separation is achieved by introducing a

central data cloud, which holds copies of all unrestricted data sets by

replication from the connected data centres, and which serves as a central data

cache for efficiently executing user shopping requests.
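The front-end/back-end split described above can be illustrated with a minimal Python sketch. It is a simplified model under stated assumptions, not the CDI software itself: the `DataSet` class, the `route_request` function and the returned labels are hypothetical names chosen for illustration. It only shows the idea that unrestricted data sets are served from the central data cache, while restricted ones stay with the data centre that manages them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSet:
    """Hypothetical, simplified view of one CDI entry."""
    cdi_id: str        # illustrative identifier, not the real CDI key format
    data_centre: str   # data centre that manages the original file
    restricted: bool   # True if access has to be granted by the data centre


def route_request(dataset: DataSet) -> str:
    """Return where a user's shopping request for `dataset` would be handled."""
    if dataset.restricted:
        # Restricted data are managed locally and are not replicated.
        return f"data-centre:{dataset.data_centre}"
    # Unrestricted data are replicated to, and served from, the central cache.
    return "central-cache"


basket = [
    DataSet("cdi-001", "centre-A", restricted=False),
    DataSet("cdi-002", "centre-B", restricted=True),
]
routes = {d.cdi_id: route_request(d) for d in basket}
```

In this model a basket mixing both kinds of data sets is split into a part that can be delivered directly and a part that waits for a decision by the data centre.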

New CDI service components

- Local software tools at data centres to prepare metadata and data files in SeaDataNet formats and using SeaDataNet controlled vocabularies, from local data resources
- Replication Manager at data centres for importing CDI metadata into the central CDI catalogue and associated data files into the Data Cache cloud, orchestrated by the CDI Import Manager
- Data Cache cloud with adapted EUDAT services for import, storing, and downloading
- Upgraded CDI User Interface and facilities for shopping and tracking of shopping requests, by users and data centres

Table: Architecture of upgraded CDI service

GUI and MySeaDataNet:

The interface is intuitive, but online help is available in case of questions.

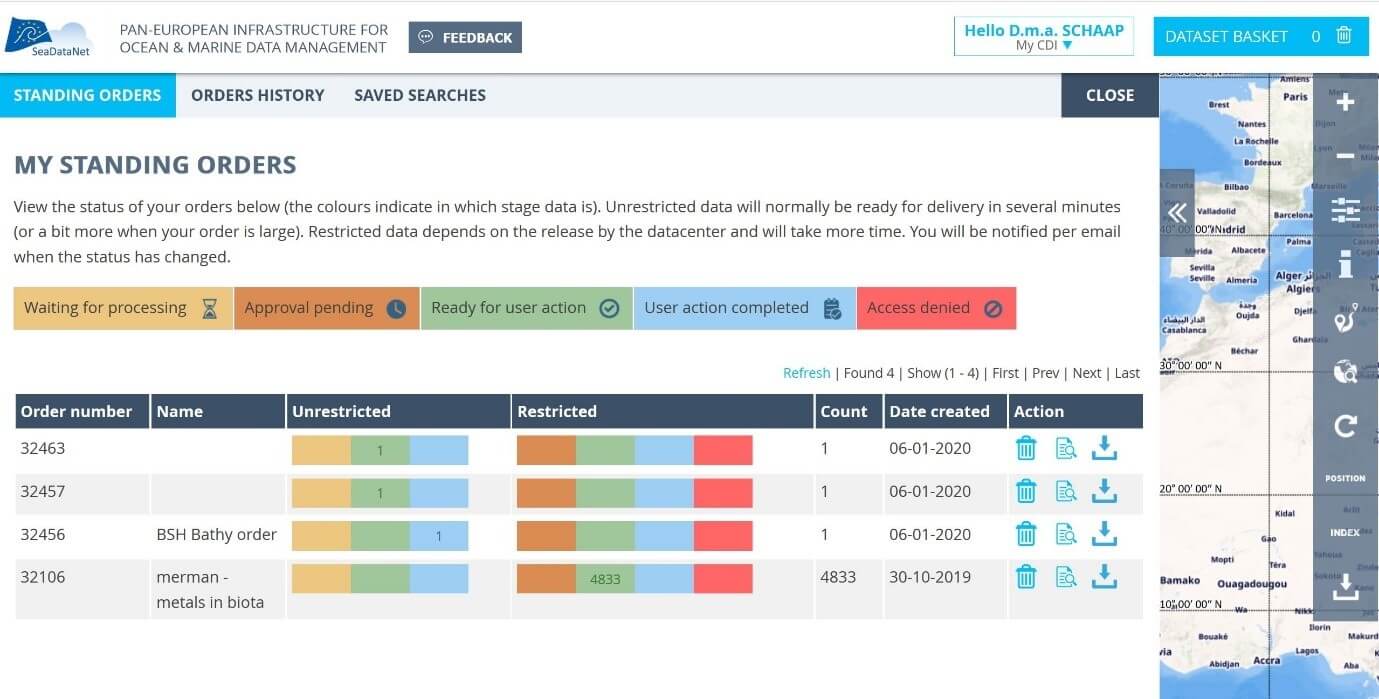

The

Graphical User Interface gives users powerful search options by combining free

search, facet search and geographic search options, powered by Elasticsearch,

SQL search, and GeoServer. The data access function comprises a simple and

effective data shopping, tracking and download service mechanism. All

functions for both users and data providers can be reached from a new

MySeaDataNet dashboard, depending on the Marine-ID and associated registered

functions and roles. As part of this, the shopping process now has an integrated

dialogue instead of having to go to separate applications and URLs, for example

for searching, registering, checking shopping progress, and retrieving data

sets. This makes the dialogues for users and providers much more efficient, and

easy to understand and perform. Furthermore, several processes and

functionalities have been reviewed and optimised, including performance, which

again benefits users and data providers.

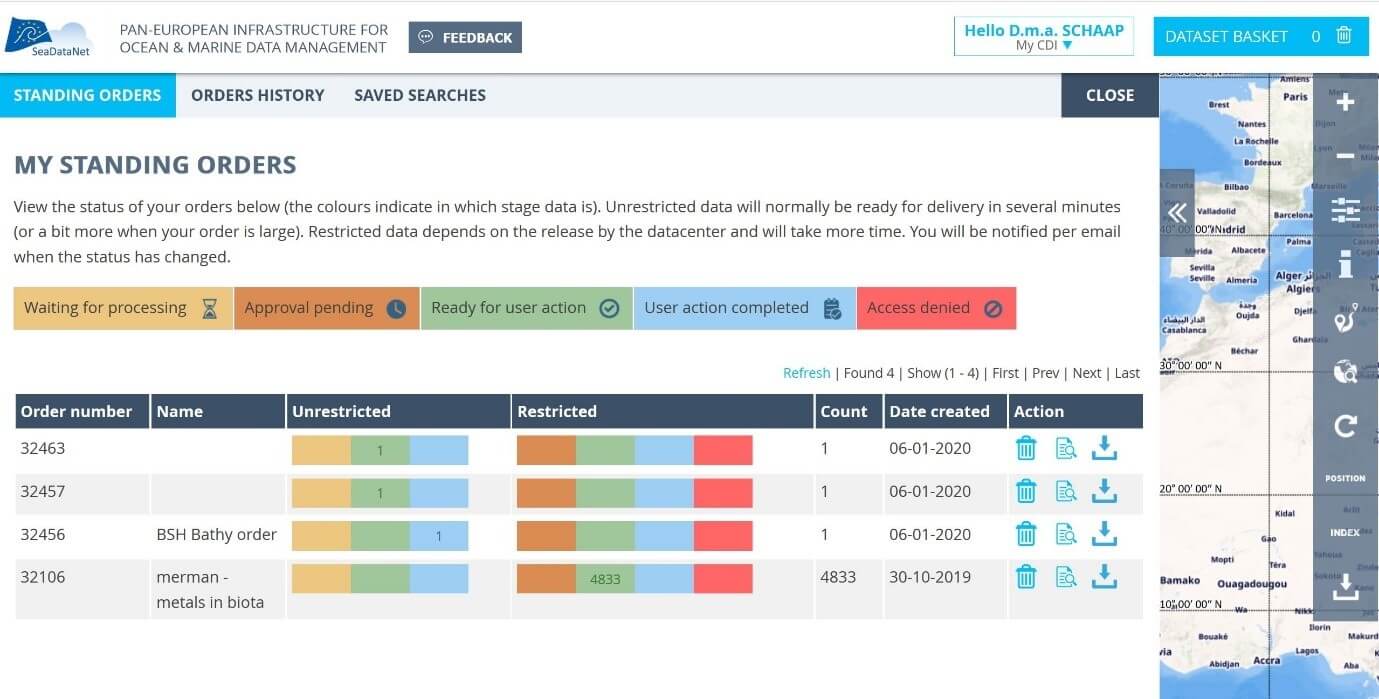

Image: Example screen of the shopping

dialogue of the upgraded CDI service

For data centres, the MySeaDataNet environment gives access

to an online CDI Import Manager service with a dashboard to manage imports of

new and updated CDI and data entries. And it gives data centres the option to

evaluate and process shopping requests for restricted data sets, which are

managed locally and not in the central data cache. The import of metadata and

unrestricted data as well as the delivery of restricted data sets, once agreed,

are structured as workflows and are more self-service oriented than in the previous

situation. These services work together with the Replication Manager for

efficient automatic processing. The Replication Manager replaces the Download

Manager, and is easier to install and configure if done by technicians who are

well aware of their local infrastructure. Alternatively, the semi-automatic

'interim solution' is maintained for data centres that are not allowed to

install a local component. However, extra functionality is added to the CDI

Import Manager for the CDI support desk to mediate for 'interim' data centres

to maintain the CDI catalogue, now both with unrestricted and restricted data

sets. This gives 'interim' data centres the option to relax their data policies

and to provide unrestricted data sets. Moving from the interim solution to a

set-up with the Replication Manager remains an option that further streamlines the

processes.

SeaDataNet role and position in the European marine data landscape:

The CDI service is instrumental for the position and

importance of SeaDataNet in the marine and ocean domain. SeaDataNet plays a leading

role in the European marine data management landscape, next to and in

cooperation with other leading European infrastructures, such as European Marine Observation and Data network (EMODnet)

and Copernicus Marine Environmental Monitoring Service (CMEMS). The SeaDataNet network of National Oceanographic

Data Centres (NODCs) and data focal points provides essential connections and

functionality at national levels in Europe to the many originators of marine

observation and analysis data. The more than 100 SeaDataNet national data

centre nodes manage, store, quality control, curate, and distribute ocean and marine

data sets, for physics, chemistry, geology, biology, and geophysics. The

original data are collected during research cruises, national monitoring

programs, scientific projects, and other observational activities in European

coastal marine waters, regional seas and the global ocean. The originators are more

than 750 organisations, such as marine research institutes, monitoring

agencies, geological surveys, hydrographic services, universities, and private

sector, who use the SeaDataNet data centre nodes for long-term archival and

wide distribution.

The SeaDataNet CDI Data Discovery and

Access service gives harmonized discovery and access to this large and

ever-increasing volume of marine and ocean data sets as handled and managed at

the SeaDataNet data centre nodes. The archived and curated data are increasingly

a major input for developing added-value services and products that serve

users from government, research and industry. Major products are developed by

EMODnet lots, CMEMS, and SeaDataNet itself. For instance, EMODnet Chemistry

delivers harmonized and validated aggregated data collections for

eutrophication, and contaminants for all European sea basins which serve the

Marine Strategy Framework Directive (MSFD) and are taken up by Regional sea

Conventions, EU DG-Environment and European Environment Agency (EEA) for

supporting assessments and deriving indicators. The data as harmonised and

bundled by SeaDataNet provide the initial basis and facilitate regular

updating of those EMODnet products as new data become available. In

turn, EMODnet stimulates more originators of data and more data centres to

join the SeaDataNet network of data centres and the CDI service for including

their original observation and analysis data sets, this way expanding and

strengthening the basis under the products and improving their quality. CMEMS

deploys pan-European capacity for Ocean Monitoring and Forecasting. SeaDataNet

and CMEMS have an MoU in place for mutual exchanges of data, adoption by CMEMS

of SeaDataNet standards, and for developing joint products such as

climatologies. Next to serving organised communities, the SeaDataNet CDI

service also serves individual users, mostly from research sectors. This is

further stimulated, inter alia through cooperation with the evolving European

Open Science Cloud (EOSC).

Optimising the robustness and performance of the new CDI service:

During the SeaDataCloud developments, major attention was given to the

fine-tuning and further integration of the individual system components,

deploying various use cases, including upscaling of numbers and volumes of

imported and retrieved data files, and monitoring the behaviour and functioning

of the processes aiming for operational stability and ruling out interruptions

and failures. During the

tests, performance was measured and tuning was undertaken to bring

it to an acceptable level. The tuning involved arranging more

computing resources, such as more CPUs, workers, and memory, as well as

making processes faster and more efficient. Considerable effort also went into fine-tuning and completing

interfaces and communications for data providers and end-users. In addition,

two training sessions were organized for data managers and technicians on

common standards, data management procedures, and installing and using upgraded

system components and tools. The first training workshop gave

technicians and data managers of data centres a preview of the new CDI user

interface allowing data centres to test a beta version and to provide feedback

about their findings and suggestions. This feedback was great input for further

developing and fine-tuning the new CDI user interface. The second training

workshop gave data centres more details and hands-on training with the further

developed import procedure and how to deploy the Replication Manager at their

data centres in order to migrate from the old CDI system with Download Manager

to the new CDI system configuration. This gave insights for improving

installation and configuration instructions and manuals of software components.

This second training workshop was also an important event as part of the

process for wider deployment of the new CDI service at each of the more than

100 CDI data centres. During this migration process of several months, further

feedback was provided about bugs and topics for improvement, leading to new

releases of the Replication Manager software and upgrading of the services,

operated by SeaDataNet partners and EUDAT. Moreover, the system for operational monitoring of the

upgraded components of the CDI service and the new components, in particular on

the EUDAT platform, needed to be upgraded.

Different environments have been set up for development, test

and production. This applies to the components as given above for the

SeaDataNet and EUDAT platforms, while for Data Centres no development environment

is included, but only test and production. This separation in environments

makes it possible to undertake additional developments, which are moved to the

operational test environment for integrated testing, followed by moving to

operational production. The test environment at Data Centres serves to install,

configure, and test new versions of the Replication Manager, while the old

version stays in production until it can be replaced.

Future developments:

Further upgrading of the CDI service is

undertaken in synergy with the ENVRI-FAIR project. This is in particular aiming

at improving the FAIRness of the service, both by enriching metadata and by

optimizing machine-to-machine services. For this, SeaDataNet is introducing

SPARQL endpoints for all its European directories and common vocabularies,

adopting common patterns, following the Linked Data principles. SPARQL is a W3C standard query

language for Resource Description Framework (RDF) data. Linked Data

representations of the various SeaDataCloud catalogues will allow ease of

interoperability at a global scale. In addition, using these principles and

services, the CDI metadata can be enriched automatically by making use of

additional metadata from the linked SeaDataNet directories and possible other

external sources, such as DOI landing pages for scientific papers. This will be

deployed for enriching the discovery and detail pages, while also a CDI API is

under development, making full use of the Linked Data model.
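As an illustration of what machine-to-machine access through a SPARQL endpoint can look like, the following Python sketch builds a query and the corresponding GET request URL. It rests on several assumptions that are not taken from the newsletter: the endpoint URL is a placeholder, the vocabulary terms are assumed to be published as SKOS concepts with `skos:prefLabel` labels, and the function names are hypothetical. No request is actually sent.

```python
from urllib.parse import urlencode

# Placeholder endpoint: an assumption for illustration, not a SeaDataNet URL.
ENDPOINT = "https://example.org/sparql"


def build_label_query(search_term: str, limit: int = 10) -> str:
    """Build a SPARQL query listing concepts whose label contains `search_term`."""
    # Escape backslashes and double quotes so the term is a valid SPARQL literal.
    safe = search_term.replace("\\", "\\\\").replace('"', '\\"')
    return (
        "PREFIX skos: <http://www.w3.org/2004/02/skos/core#>\n"
        "SELECT ?concept ?label WHERE {\n"
        "  ?concept skos:prefLabel ?label .\n"
        f'  FILTER(CONTAINS(LCASE(STR(?label)), LCASE("{safe}")))\n'
        "}\n"
        f"LIMIT {int(limit)}"
    )


def build_request_url(query: str) -> str:
    """Encode the query as a GET request, as allowed by the SPARQL 1.1 Protocol."""
    return ENDPOINT + "?" + urlencode({"query": query})


query = build_label_query("salinity", limit=5)
url = build_request_url(query)
```

A client would then fetch `url` with an `Accept` header such as `application/sparql-results+json` and read the matching concepts from the result bindings.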

Try it out yourself and customer survey:

You are invited to try out the upgraded service yourself, starting at the CDI service landing page.

Discovery and browsing of metadata are

public, while a one-time user registration is required for submitting data

requests. If you do, we also ask you to complete a short survey, which you can

find with a button in the top bar. The survey results will help us to understand how well

the service is working and what might need to be improved in the future.

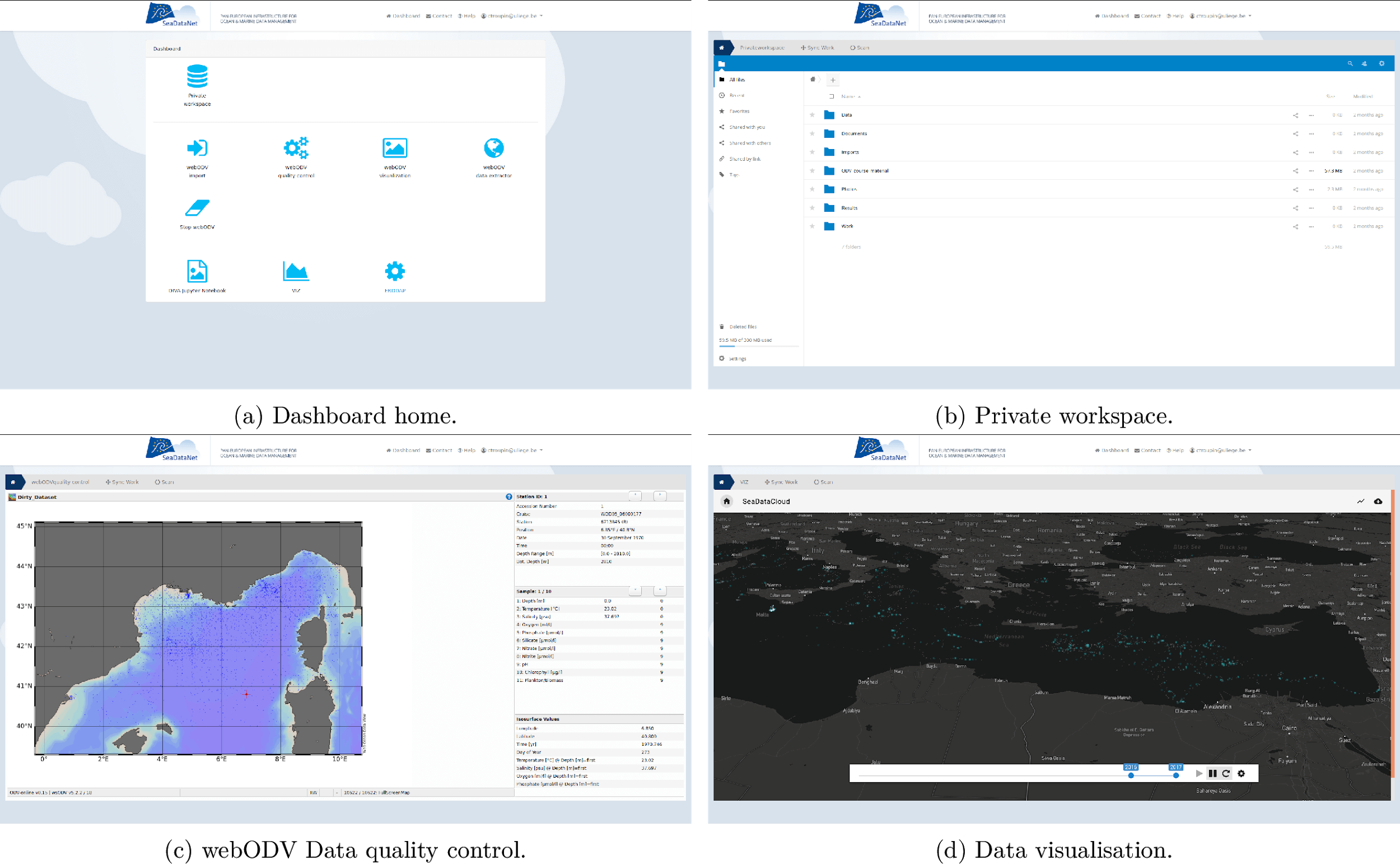

For researchers, the conventional work using

their desktop computer to analyse data and generate products is getting more

and more tedious due to the increasing amount of data to process and the

complexity of the procedures. Three main hurdles often prevent them from

performing the required operations:

- Insufficient

CPU power, leading to excessively long computation times

- Lack

of available memory to process large datasets

- Insufficient

disk storage, making the use of the full datasets impossible.

To overcome these, cloud services are becoming

common practice, such as the EUDAT European Research Data Infrastructure or the European Open Science Cloud

(EOSC). With

such services, no more data downloading is needed: all the processing is

performed close to the data. In

addition, the cloud allows research on a common platform, with a guarantee that

the latest versions of the software tools are installed, and enhances the

reproducibility of the scientific work. In order to gain experience with

web-based science and to provide the oceanographic community with seamless

access to SeaDataNet data and software tools, the SeaDataNet Virtual

Research Environment (VRE) prototype has been developed and

deployed.

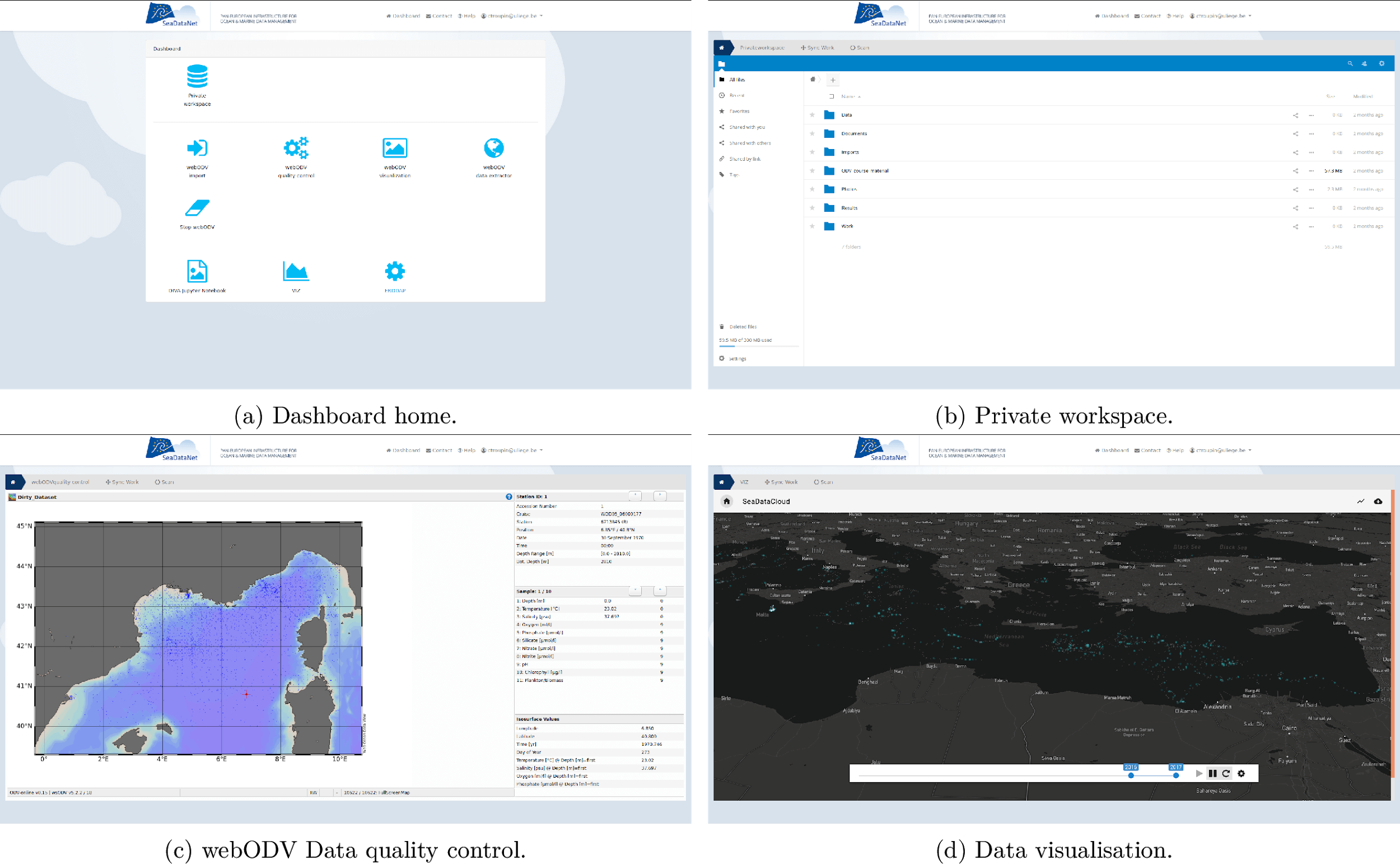

SeaDataNet VRE tools overview:

Users with an account can log in to the VRE with their Marine-ID, the

SeaDataNet identity provider, and then enter a dashboard with access to a

private workspace as well as the different services described below.

webODV consists of a suite of online services based on the Ocean Data View (ODV) software, designed to interactively perform analysis, exploration and visualization of ocean data. webODV allows users to aggregate large numbers of SeaDataNet data files and perform quality control.

DIVAnd (Data Interpolating Variational Analysis in n dimensions) is a cutting-edge software tool designed to efficiently interpolate in-situ observations onto a regular grid, in an arbitrary number of dimensions (for instance longitude, depth and time). A set of Jupyter notebooks provides a guideline to the user on how to prepare the data, optimise the analysis parameters and perform the interpolation.

BioQC is a tool to process and to run quality control on biological datasets. BioQC helps researchers to evaluate whether a particular biological occurrence record within the input file is useful for their analysis. It also helps the data providers to identify possible gaps and errors in their datasets. The tool returns the input file with quality information attached for each occurrence record and a detailed report. This result file will enable the users to filter for suitable records.

VIZ is a modern and dynamic visualisation service to explore datasets on a map. By clicking on data points, the users see a plot of the full profile prepared with webODV, and metadata of the input ODV files. A time selector makes it possible to limit the data for the period of interest. Additionally, the visualisation service provides the possibility to explore 4D gridded products prepared with DIVAnd.

The Subsetting service based on ERDDAP strives to make data access easier, by providing services to subset, download and plot data. It returns datasets in various data formats such as CSV, MATLAB, netCDF, ODV and more. An interactive visualization tool allows users to discover and browse through the subset results with modern web technologies.
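To give an idea of how a subset request to an ERDDAP server is expressed, the sketch below assembles a request URL in ERDDAP's tabledap style: a dataset identifier, a file type extension, a list of variables, and constraints. The server address, the dataset identifier `demo` and the variable names are placeholders, and it is an assumption that the data are served as a tabular (tabledap) dataset; the function name is hypothetical and no request is sent.

```python
from urllib.parse import quote


def tabledap_url(base, dataset_id, variables, constraints, file_type="csv"):
    """Assemble an ERDDAP tabledap request URL.

    base        -- ERDDAP root URL (placeholder in the example below)
    dataset_id  -- dataset identifier on that server
    variables   -- names of the variables (columns) to return
    constraints -- strings such as "time>=2010-01-01T00:00:00Z"
    file_type   -- response format extension, e.g. "csv", "nc", "mat", "odvTxt"
    """
    query = ",".join(variables)
    for constraint in constraints:
        # Percent-encode characters such as ">" and ":" but keep "=".
        query += "&" + quote(constraint, safe="=()")
    return f"{base}/tabledap/{dataset_id}.{file_type}?{query}"


url = tabledap_url(
    "https://example.org/erddap",      # placeholder server
    "demo",                            # placeholder dataset identifier
    ["time", "temperature"],
    ["time>=2010-01-01T00:00:00Z"],
)
```

Changing `file_type` is all that is needed to ask for the same subset in another format, which is what makes this URL convention convenient for scripted access.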

Image: Different components of the VRE.

How is the Virtual Research Environment deployed?

The VRE's components are deployed on different

servers across various EUDAT data centres to distribute the load. The shared

central components as well as the various processing services are deployed as Docker containers. These provide a standardized computing

environment across all data centres and significantly facilitate the

development of the VRE. The services are loosely coupled: the VRE provides

central services such as Single Sign-On and central web storage for users, but

the services can also run as standalone services. This allows one to easily

extend the architecture and integrate heterogeneous services.

The dashboard is the heart of the VRE.

Besides providing the main user interface, it is responsible for managing

diverse aspects of the VRE. It provides the user interface with access to the

different services and to the user's private workspace, an online storage space

for users to store their own datasets and the results of their analysis.

Additionally, the dashboard performs some tasks behind the scenes: it is

responsible for triggering data synchronisation mechanisms and ensures user

authorization for the various services by a token-based authorization system. The dashboard is a web application based on the PHP framework Laravel, which

includes a user management system and implements state-of-the-art security

measures. The authentication/authorization protocol OAuth is used to provide

Single Sign-On authentication with Marine-ID, based on EUDAT's B2ACCESS, an

authentication service used by several European projects and initiatives. The private workspace is an instance of

the well-known Nextcloud tool, open-source software for cloud-based

collaboration. It is customized for the SeaDataNet VRE and runs on its hardware.
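The token-based authorization between the dashboard and the services mentioned above can be pictured with a generic sketch. The newsletter does not describe the token format, so everything here is an assumption: the sketch uses an HMAC-signed token with an expiry time, and the function names, the `|` separator and the shared secret are illustrative only. It is not the VRE's actual mechanism.

```python
import hashlib
import hmac
import time


def issue_token(user: str, service: str, secret: bytes, expires_at: int) -> str:
    """Create a signed token stating that `user` may use `service` until `expires_at`."""
    payload = f"{user}|{service}|{expires_at}"
    signature = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{signature}"


def verify_token(token: str, service: str, secret: bytes, now=None) -> bool:
    """Check signature, target service and expiry of a token."""
    try:
        user, token_service, expires_at, signature = token.split("|")
    except ValueError:
        return False  # wrong number of fields
    payload = f"{user}|{token_service}|{expires_at}"
    expected = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False  # tampered with, or signed with another secret
    if token_service != service:
        return False  # issued for a different service
    current = time.time() if now is None else now
    return current < int(expires_at)


SECRET = b"demo-secret"  # illustrative only; a real secret must stay private
token = issue_token("alice", "webodv", SECRET, expires_at=2_000)
```

In such a scheme the dashboard would issue the token after login, and each service would verify it without having to contact the dashboard again.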

The file selector is a bridge

between most VRE services and the user's private workspace, allowing users to

select data from their private workspace to be handed over to the service

instances by the back end. Its front end is based on hummingbird-treeview, a

JavaScript tool for displaying lists or file/folder structures as hierarchical

trees. Services can customize the treeview to their needs.

Two types of processing services:

The actual processing tools - the services - are what researchers use to perform their analyses. Two types of

services are deployed in the VRE. Some services operate like traditional web

servers, where one central instance serves all users' requests. The BioQC tool, the VIZ-ualisation

tool, and webODV are examples of this type. For these

services, one Docker container runs continuously and waits for users,

and the work of different users is kept separate using HTTP sessions. This is

the first type of service.

The second type of service is for the more

computation-intensive tools or those whose backing software is not optimized

for separating multiple users' sessions. The latter frequently occurs when porting

software that was developed for desktop machines to the cloud-based mode. This

type of service requires one instance for every user. As services are packaged

as Docker containers, this means that one Docker container per user is deployed.

JupyterHub is used to spawn the containers upon the user's request. JupyterHub

was originally developed to serve Jupyter notebooks, but - slightly diverging

from its intended use - it can be used for any tool that is packaged as a Docker

container and interacts with the user via HTTP. JupyterHub's benefits include

instance management, authentication/authorization, and solid web security

measures such as reverse proxying and SSL termination. This solution is used

for DIVAnd, which actually runs as Jupyter notebooks, and for the ERDDAP

subsetting service.
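The difference between the two service types can be summarised in a small Python model. It is only a sketch under assumptions: the `ServiceRouter` class, the service keys and the instance names are hypothetical, and in the VRE the per-user instances are spawned by JupyterHub rather than by code like this. The model shows one shared instance for the first type and one instance per user for the second.

```python
# Service keys are illustrative labels for the tools named in the text.
SHARED_SERVICES = {"bioqc", "viz", "webodv"}
PER_USER_SERVICES = {"divand", "erddap-subsetting"}


class ServiceRouter:
    """Toy model: decide which service instance handles a user's request."""

    def __init__(self):
        self._per_user_instances = {}  # (service, user) -> instance name

    def instance_for(self, service: str, user: str) -> str:
        if service in SHARED_SERVICES:
            # One long-running instance; users are separated by HTTP sessions.
            return f"{service}-shared"
        if service in PER_USER_SERVICES:
            # One instance (container) per user, created on first request.
            key = (service, user)
            if key not in self._per_user_instances:
                self._per_user_instances[key] = f"{service}-{user}"
            return self._per_user_instances[key]
        raise KeyError(f"unknown service: {service}")

    def running_per_user_instances(self) -> int:
        return len(self._per_user_instances)


router = ServiceRouter()
first = router.instance_for("divand", "alice")
second = router.instance_for("divand", "bob")
shared = router.instance_for("webodv", "alice")
```

The model also makes the resource trade-off visible: the number of per-user instances grows with the number of active users, whereas a shared service always occupies a single instance.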

The DIVAnd tool is provided to users through a Docker image that includes the Julia language (currently version 1.4.0) along with packages such as PyPlot (plotting library) and NCDatasets (manipulation of netCDF files), required libraries and tools (e.g. netCDF, unzip, git), the master version of DIVAnd.jl, and the master version of the DIVAnd notebooks.

The VRE's subsetting service embeds an instance

of ERDDAP, configured dynamically and started on the

fly according to a dataset selected by the user. The service provides a

consistent way to create and download subsets of scientific datasets in netCDF

format. The subsetting service's Docker image includes Java

and Python for the web services, a web server to run applications (Tomcat),

the ERDDAP web application, a visualization frontend using the Vue.js framework,

plus some further required libraries and tools (e.g. netCDF).

Future perspective

In the final year the SeaDataNet VRE prototype will

be further tested by user communities to better suit their needs and to fix

performance issues. The VRE prototype will also be brought to operational level

and actively monitored. After that it will be released on the SeaDataNet website for wider user tests with sufficient

instruction about the tools the user can expect, and with a request to provide

feedback and suggestions for improvement. These suggestions and feedback will

be used as a basis for further development in future projects.

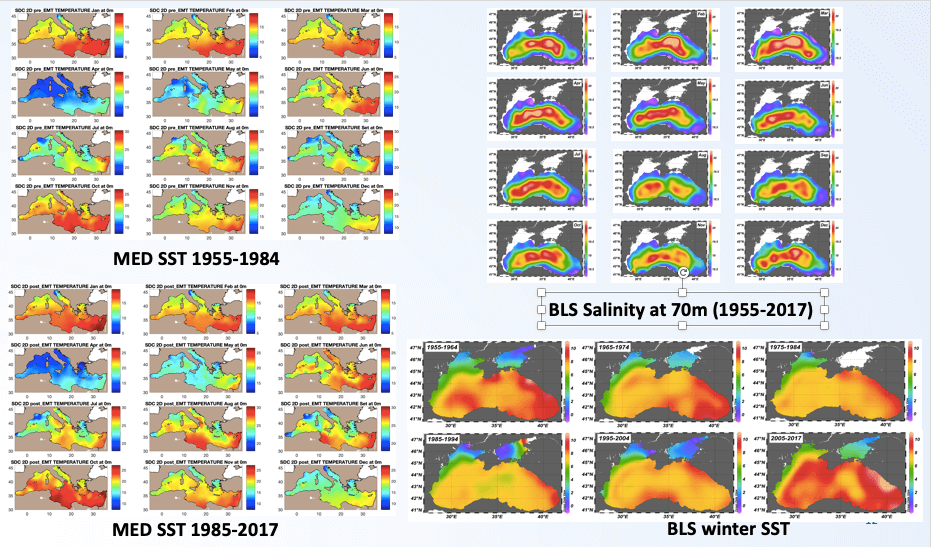

In

the framework of the SeaDataCloud project, the WP11 team is mainly dedicated to

generating data products such as temperature and salinity data collections and

climatologies for the EU marginal seas (Mediterranean, Black Sea, North

Atlantic, Baltic Sea, North Sea, Arctic Ocean) and the global ocean. The goal

is to provide the best data products from SeaDataNet at regional and

global scale and serve diverse user communities (operational oceanography,

climate, marine environment, institutional, academia). The regional aggregated

data sets for all EU marginal seas contain all temperature and salinity data

harvested from the SeaDataNet infrastructure. The data sets are then validated and

elaborated by regional experts. The resulting datasets are used to produce the

climatologies, gridded fields obtained through DIVAnd mapping tool and

representing the climate of the ocean at regional and global scale. In addition

to these standard products, new data products are planned by the end of the

project, in which the WP11 team will explore a multi-platform and

multi-disciplinary approach combining in situ (e.g. gliders, Argo, ships,

drifters, fixed platforms) and remotely sensed observations, as well as Ocean Monitoring

Indicators for tracking ocean mechanisms and/or climate modes and trends. The

WP11 team is making full use of the data sets as available in SeaDataNet and of

many of the services and tools which are provided by the SeaDataNet

infrastructure for discovery, access, validation, quality control,

visualization, interpolation, and gridding of retrieved data sets. This way,

the WP11 team is contributing to verifying the quality of the SeaDataNet

infrastructure content and services. As such it is also involved in the

SeaDataNet VRE development as a test team.

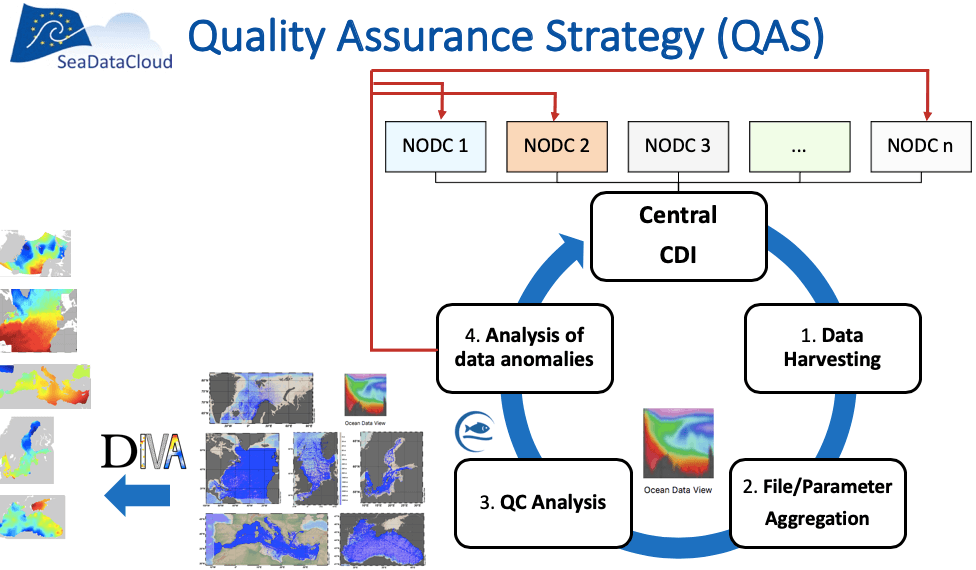

Quality Assurance Strategy:

The SeaDataNet partnership has worked

jointly to implement and progressively refine a unique Quality Assurance

Strategy (QAS), shown in the image below, aimed at continuously improving the

quality of the database content and creating the best data products. The QAS

consists of four main phases:

- all data and metadata are harvested from the central Common Data Index (CDI) service, using an internal buffer system configured for specific subsettings;
- files and parameters are aggregated to generate a metadata-enriched ODV (Ocean Data View) collection;
- Quality Check (QC) analysis by regional experts on the regional ODV collections further validates (secondary QC) the data and the Quality Flags assigned by data providers (primary QC);
- analysis and correction of the detected data anomalies by the NODCs (National Oceanographic Data Centres), which finally update the corrected data within the infrastructure.

The approach is iterative to continuously upgrade the quality of database content and data products.

Phases 2 and 3 rely on ODV software for the aggregation and quality check of data, further developing the guidelines introduced within SeaDataNet projects. The WP11 team also provides recommendations for the development of ODV software and its webODV version. The phase 3 output consists of validated aggregated data sets for all EU marginal seas in ODV collection format. These data sets are used to compute temperature and salinity climatologies through the DIVAnd software tool, which spatially interpolates observations onto a regular grid in an optimal way. The WP11 team also contributes to the DIVAnd software through intensive testing and debugging.

Image: SeaDataCloud Quality Assurance Strategy.

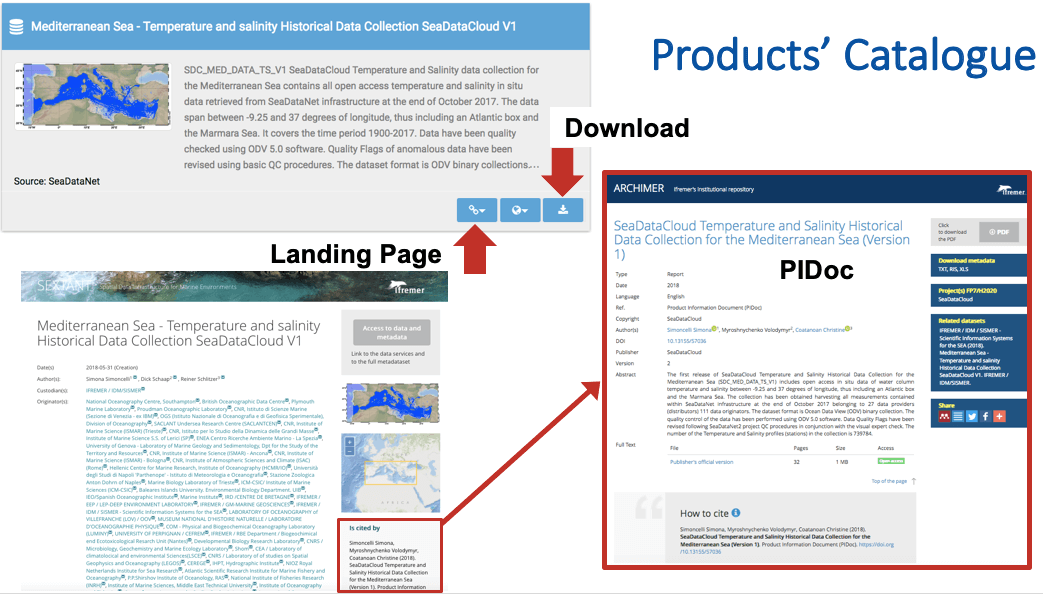

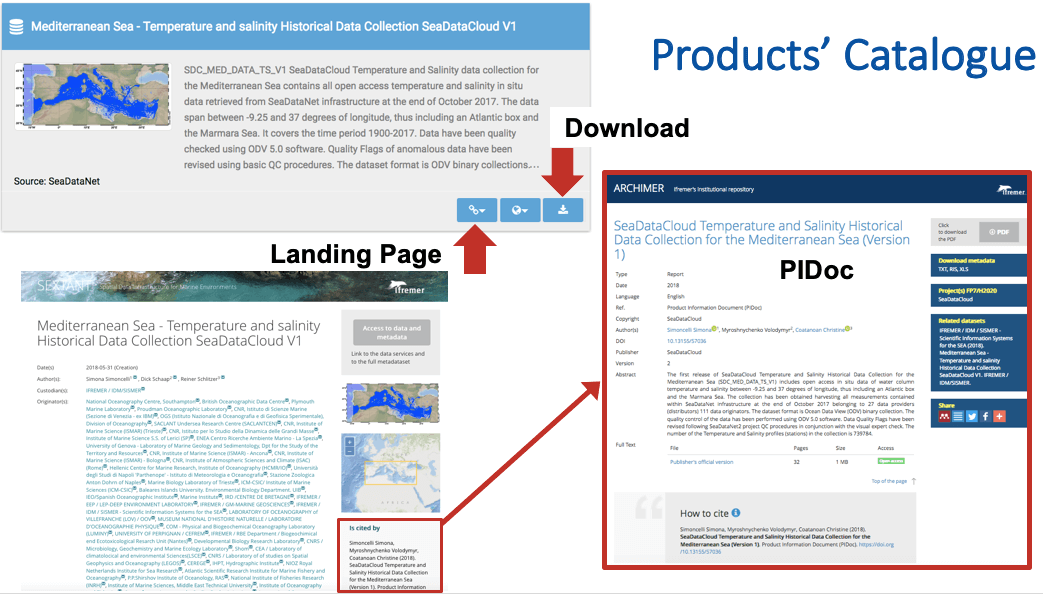

The first version of the aggregated and validated temperature and salinity data collections (SDC_DATA_TS_V1) was released in June 2018. The collections are accessible through the SeaDataNet products catalogue together with their Product Information Document (PIDoc), which contains all specifications about the product's generation, quality assessment and technical details to increase users' confidence and facilitate product uptake. Digital Object Identifiers (DOI) are assigned to products and their PIDocs, following the linked data approach, to foster transparency of the production chain and acknowledge all actors involved, from data originators to product generators. An example, the Mediterranean Sea - Temperature and salinity Historical Data Collection and its PIDoc, is displayed below.

Image: How to access SeaDataCloud products and the related

Product Information Document (PIDoc) from the SeaDataNet products catalogue

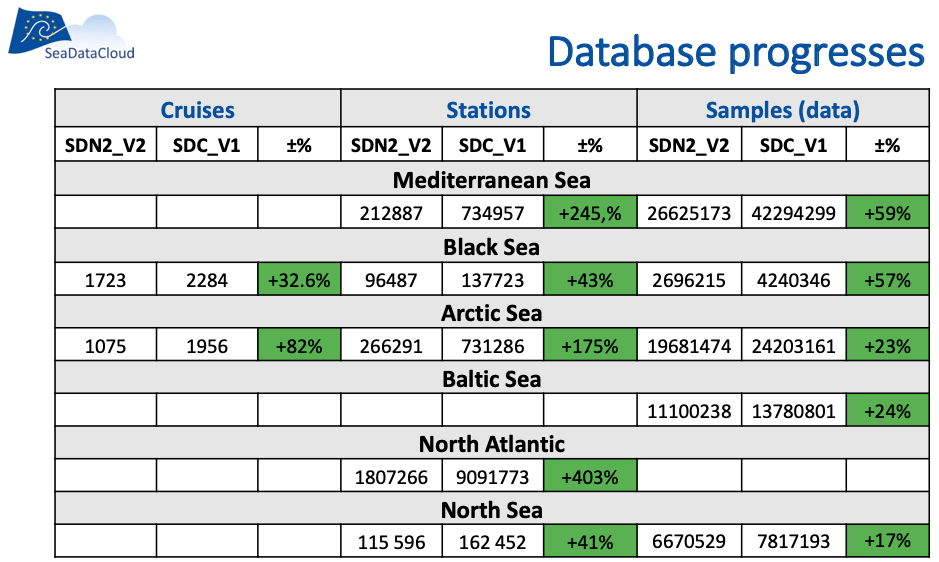

The main

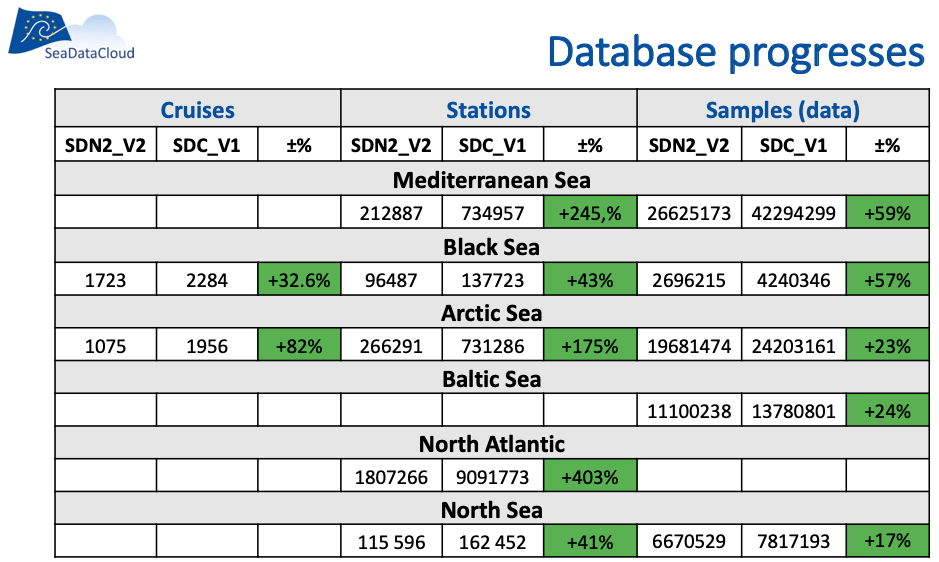

outcome from the analysis of the SDC_DATA_TS_V1

regional collections were:

- Data population statistics per sea basin showed a progressive increase of available data (see next image)
- Data quality also improved thanks to the introduction of additional checks by regional experts
- Quality Flag (QF) statistics after QC present very high percentages of good data
- New metadata statistics about data distributors/originators highlighted some systematic (format, flagging) errors but also made it possible to include a fair acknowledgment to all data providers
- New instrument type statistics made it possible to detect some data and metadata omissions within SeaDataNet.
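Quality Flag statistics of the kind listed above boil down to counting flag values and expressing them as percentages. The following Python sketch shows one way to do that. It assumes the SeaDataNet quality flag scale in which "1" means good and "2" means probably good; which flags count as "good" in the published statistics is not stated here, so `GOOD_FLAGS` is an assumption, and the function names and sample values are hypothetical.

```python
from collections import Counter

# Assumption: flags "1" (good) and "2" (probably good) are counted as good data.
GOOD_FLAGS = {"1", "2"}


def flag_percentages(flags):
    """Return the percentage of each quality flag value in `flags`."""
    counts = Counter(flags)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {flag: 100.0 * n / total for flag, n in counts.items()}


def percent_good(flags):
    """Return the share of measurements whose flag is in GOOD_FLAGS."""
    percentages = flag_percentages(flags)
    return sum(p for flag, p in percentages.items() if flag in GOOD_FLAGS)


# Made-up flags for ten measurements, for illustration only.
sample_flags = ["1"] * 8 + ["2"] + ["4"]
summary = flag_percentages(sample_flags)
good_share = percent_good(sample_flags)
```

Running the same counts per data distributor or per instrument type, instead of per collection, is how systematic flagging errors of a single provider would stand out.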

Image: Summary statistics of SeaDataNet database progress from SeaDataNet2 to SeaDataCloud

in terms of number of cruises, stations and measurements per sea basin.

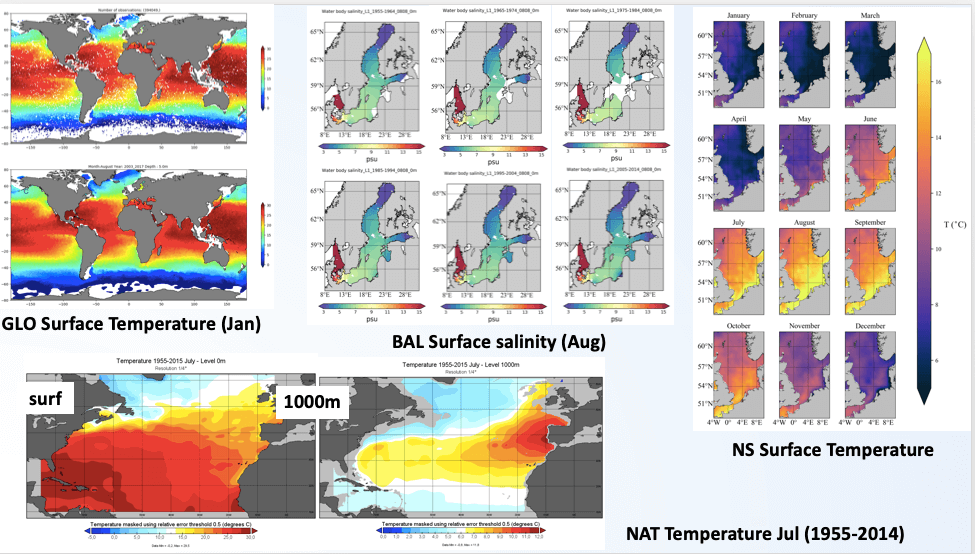

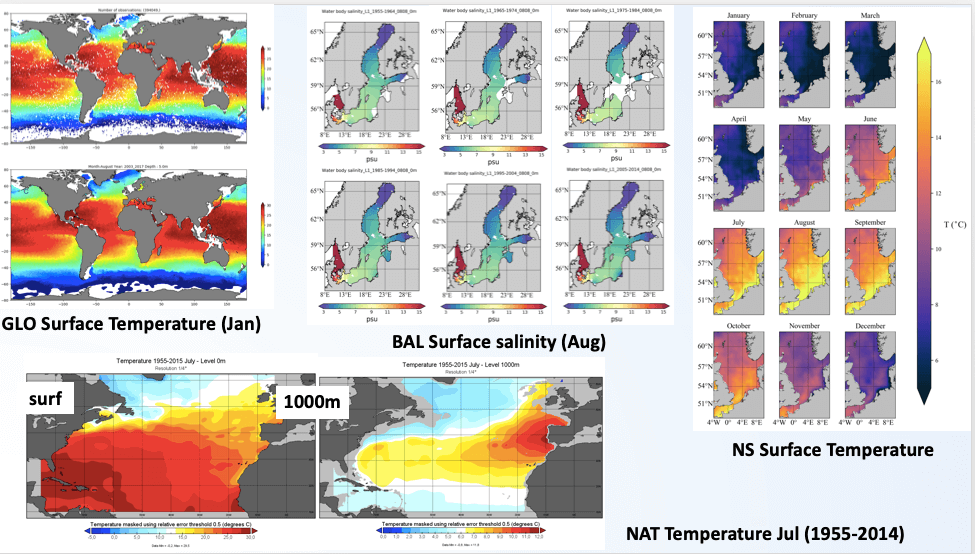

The first version of the temperature and salinity climatologies (SDC_CLIM_TS_V1) was

released in July 2019. The regional

climatologies were designed with a harmonized initial approach, all covering

the time period after 1955, when marine data started to be sufficient for

mapping at regional scale, and all adopting the World Ocean Atlas 2018 vertical

standard levels. All regional products are characterized by a monthly

climatological field covering the entire time span 1955-2014 at least (some of

them reach 2017) and decadal climatologies at seasonal temporal resolution

(monthly for the Baltic region) over six decades (1955-1964, 1965-1974,

1975-1984, 1985-1994, 1995-2004, 2005-2017). Moreover, all of them have been

created integrating, for the first time, SDC_DATA_TS_V1 aggregated datasets

(both restricted and unrestricted) with external sources (World Ocean Database

and Coriolis Ocean Dataset for Reanalysis), which greatly increased the data

coverage.
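The decadal periods listed above can be written down as a small lookup, which is how observations would be binned before any mapping. The Python sketch below is illustrative only: the function names and the sample values are hypothetical, and the actual climatologies are computed with DIVAnd, not with this code.

```python
from collections import defaultdict

# The six periods named in the text; the last one spans 2005-2017.
DECADES = [
    (1955, 1964), (1965, 1974), (1975, 1984),
    (1985, 1994), (1995, 2004), (2005, 2017),
]


def decade_of(year: int):
    """Return the (start, end) period containing `year`, or None if outside."""
    for start, end in DECADES:
        if start <= year <= end:
            return (start, end)
    return None


def group_by_decade(observations):
    """Group (year, value) pairs by period, dropping years outside all periods."""
    groups = defaultdict(list)
    for year, value in observations:
        period = decade_of(year)
        if period is not None:
            groups[period].append(value)
    return dict(groups)


# Made-up (year, temperature) pairs, for illustration only.
sample = [(1960, 12.5), (1999, 13.1), (1961, 12.7), (1940, 11.0)]
binned = group_by_decade(sample)
```

Note that the last period is longer than ten years, so it holds more years of data than the others when periods are compared.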

A global climatology (SDC_GLO_CLIM_TS_V1) has also been created for the first time. It contains two monthly climatologies for temperature and salinity with different time coverage, SDC_GLO_CLIM_TS_V1_1 (1900-2017) and SDC_GLO_CLIM_TS_V1_2 (2003-2017), computed from data from the World Ocean Database (WOD2013). This choice was made because the spatial coverage of SeaDataNet data at global scale is still too sparse.

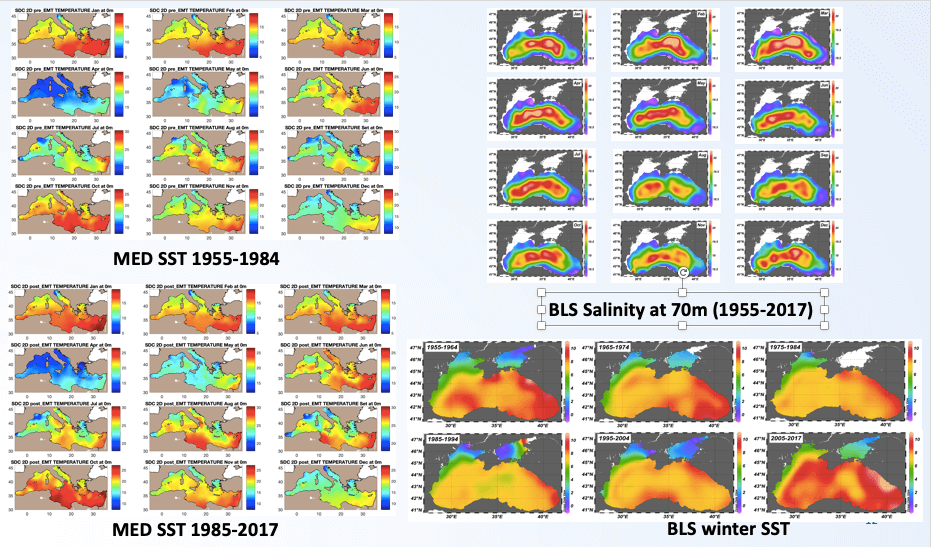

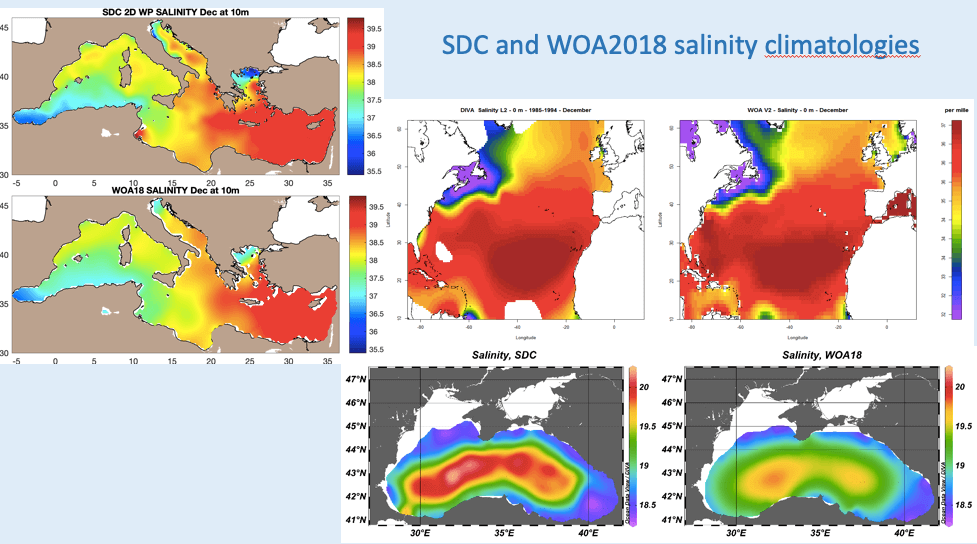

The following image contains example plots of all the SDC_CLIM_TS_V1 climatologies, extracted from the respective Product Information Documents (PIDocs).

Image: Example plots of the SDC_CLIM_TS_V1 climatologies.

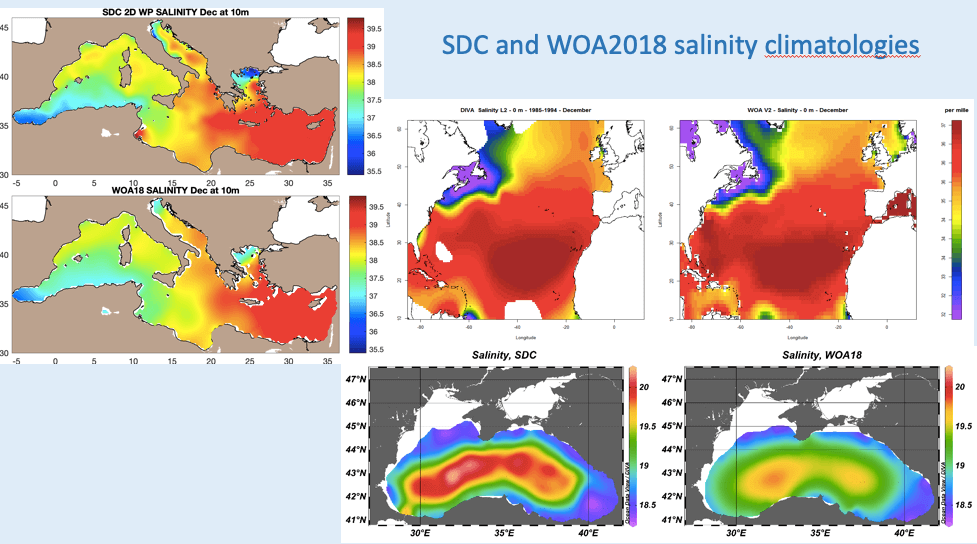

A consistency analysis of all SeaDataCloud climatologies versus the World Ocean Atlas 2018 has been performed to demonstrate the differences and the added value of SeaDataNet data products. Both qualitative and quantitative analyses suggested a good consistency of SDN products with WOA2018 and highlighted the added value of the regional products compared to the global WOA, which has a lower spatial resolution. Some issues have been identified by regional leaders on the quality of their products and have been reported in the PIDoc section named "Product Usability". Some example plots from the PIDocs are given below.

Figure: Example plots of the consistency analysis performed on the SDC_CLIM_TS_V1 climatologies.

The SDC_CLIM_TS_V1 climatologies have been produced through a major effort by all partners in the WP11 team. Several gridded fields at different spatial and/or temporal resolutions have been generated for the first time, all with increased vertical resolution. Decadal fields for regional marginal seas have been created for the first time as well.

The major achievements have been:

- The uptake of the new DIVAnd software, which the team worked jointly to debug and improve;
- The integration of external sources, which included analysing how much the data sets overlap or complement each other and detecting data anomalies and duplicates.
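To give an idea of what checking for overlap between data sources can look like, here is a minimal Python sketch that flags possible duplicates between two collections of stations by matching rounded position and hour of observation. This is an illustrative assumption only and not the procedure used by the WP11 team; the record layout (`id`, `lat`, `lon`, `time`) and the tolerances are hypothetical.

```python
from datetime import datetime


def station_key(lat, lon, time, pos_decimals=2):
    """Coarse key: position rounded to `pos_decimals`, time truncated to the hour."""
    return (round(lat, pos_decimals), round(lon, pos_decimals),
            time.strftime("%Y-%m-%dT%H"))


def find_possible_duplicates(internal, external):
    """Return (internal_id, external_id) pairs that share the same coarse key."""
    seen = {}
    for rec in internal:
        key = station_key(rec["lat"], rec["lon"], rec["time"])
        seen.setdefault(key, []).append(rec["id"])
    matches = []
    for rec in external:
        key = station_key(rec["lat"], rec["lon"], rec["time"])
        for internal_id in seen.get(key, []):
            matches.append((internal_id, rec["id"]))
    return matches


if __name__ == "__main__":
    internal = [{"id": "A", "lat": 43.501, "lon": 7.302,
                 "time": datetime(2010, 5, 1, 12, 10)}]
    external = [
        {"id": "W1", "lat": 43.499, "lon": 7.298,
         "time": datetime(2010, 5, 1, 12, 40)},
        {"id": "W2", "lat": 45.000, "lon": 13.000,
         "time": datetime(2010, 5, 1, 12, 40)},
    ]
    print(find_possible_duplicates(internal, external))
```

Key-based matching is fast but misses pairs that straddle a rounding boundary, so a real workflow would likely add a tolerance-based comparison and a check of the measured values themselves.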

The WP11 team identified possible improvements for the second version of the climatologies, whose results are expected by the end of the project. The main issues to be addressed are:

- The optimization of the production chain according to the best solutions developed by the WP11 partners;
- The optimization of the data integration process;
- The standardization of the duplicate detection process with external data sources.

A second version of the aggregated datasets (SDC_DATA_TS_V2) is going to be released very soon in the SeaDataNet products catalogue, while the new climatologies (SDC_CLIM_TS_V2) will become available by the end of the project.

In 2013, the IODE Programme of IOC/UNESCO, at its 22nd Session through Recommendation IODE-XXII.6 and in cooperation with the WMO-IOC Joint Technical Commission for Oceanography and Marine Meteorology (JCOMM), established the "Ocean Data Standards and Best Practices" (ODSBP) Project. The aim of the project was to achieve broad agreement and commitment to adopt a number of standards and best practices related to ocean data management and exchange and to facilitate interoperability. The ODSBP Project was the continuation of the "Ocean Data Standards" (ODS) Pilot Project, also established and implemented jointly by IODE and JCOMM. The ODSBP Project extended the activities of its predecessor to include the dissemination and promotion of "best practices" in addition to "standards". Proposed standards go through a review process, which includes expert and community review; when accepted, they are recommended for wide use and are published in the IOC Manuals and Guides series (see Recommended Standards).

For the dissemination of the best practices, in 2014 IODE established a document repository, the "OceanDataPractices" repository (ODPr) (Recommendation IODE-XXII.19). The repository contained a wide variety of "practices" such as manuals and guides related to oceanographic data and information management. It aimed to provide a platform for organizations to work on common standards and avoid duplication, to allow individual researchers from all around the world to find and follow practices approved by specialized expert bodies and organizations, and to enable research groups that wish to start a new research project and prepare a data management plan to search for and find existing methodologies and "best practices". The service was also intended to let users submit their own documents that they wish to share with the community. ODPr was a joint effort by several participating institutions, including ICES, IOC/IODE, JCOMM, SCOR and WMO.

The communication and use of "best practices" by the marine community is a challenging process. Oceanographic data are diverse and address multiple needs, and the way these data are collected, managed, documented and distributed may be "best" for some users but not the best solution for others. The need for FAIR, global and sustainable ocean best practice management was recognized by several national and international organizations and projects. The EU AtlantOS Project and its Best Practices Working Group, with the support of the ODIP (Ocean Data Interoperability Platform) Project and the NSF Ocean Observation Research Coordination Network, developed the Ocean Best Practices System (OBPS) concept. The long-term objective of the OBPS is to provide the ocean research, observing and application communities with a mechanism to discover, review, agree upon, adopt and support the widest possible dissemination of ocean best practices. The existing IOC/UNESCO IODE "OceanDataPractices" repository (ODPr) was identified as a permanent, sustainable repository, and in 2017 its name was changed to "OceanBestPractices" repository to reflect the broader spectrum of "all ocean-related" best practices. The development of the new System was centered around this repository, which addresses the best practices management challenges and is today one of the System's elements. In 2019, the OBPS was adopted by the IOC as an international project co-sponsored by the Global Ocean Observing System (GOOS) and the International Oceanographic Data and Information Exchange (IODE). The new System includes the following elements: 1) a permanent repository, the "OceanBestPracticesSystem Repository" (OBPS-R), hosted by IODE, which offers the scientific community a platform to publish their ocean-related best practices and find the practices of others using innovative search and access technology; 2) a peer-reviewed Research Topic Journal in Frontiers in Marine Science; 3) a web-based and in-person Training and Capacity Building capability based on the IODE OceanTeacher Global Academy; 4) community outreach and engagement activities to help users integrate OBPS into their routine work.

SeaDataNet contributions:

SeaDataNet has been actively contributing since the early steps of these initiatives and international activities for the development of "standards" and "best practices". In 2014, four SeaDataNet standards proposals were submitted to the ODSBP Project:

- SeaDataNet Cruise Summary Report (CSR) Data Model proposal
- SeaDataNet Cruise Summary Report (CSR) XML Encoding proposal
- SeaDataNet Common Data Index (CDI) Data Model proposal
- SeaDataNet Common Data Index (CDI) XML Encoding proposal

The scope of the proposals was to acknowledge the SeaDataNet CDI and Cruise Summary Report (CSR) metadata profiles as standard metadata models for the documentation of Marine & Oceanographic Datasets and Cruise Reports, and their XML encodings as the reference XML implementations. In particular, the proposals aimed to promote CDI and CSR as regional (i.e. European) standards.

In 2015, two joint proposals by the SeaDataNet and ODIP projects were also submitted to the IODE Standards Process:

- SeaDataNet Controlled Vocabularies, and
- SeaDataNet NetCDF (CF) data format,

aiming to acknowledge the SeaDataNet Controlled Vocabularies and NetCDF (CF) as standards used in metadata and data format descriptions and as a data transport model for processing and sharing Marine and Oceanographic Datasets, and to promote them as regional (i.e. European) standards.

The controlled vocabularies have been adopted and published as a recommended standard, both in a volume of the IOC Manuals and Guides No. 54 and at the Ocean Data Standards site. The revision of the CDI and CSR metadata models and XML schemas following the expert comments has been finalized by the developers of the proposals, including the updating of the technical reference documentation. The next step of the process is the community review.

As the NetCDF (CF) data format

elements were intended for a "regional" rather than a global standard, the

document was submitted as a "best practice" to the "OceanBestPracticesSystem

Repository" (OBPS-R). In 2019, SeaDataNet submitted an additional ten documents

to the repository (including its standards) describing its data management

manuals and protocols and its data and metadata format specifications:

- Flow Cytometry data: format and examples of SeaDataNet ODV data and CDI xml metadata files.
- Guidelines and forms for gathering marine litter data. [Updated version: 26/03/2019].
- Ingesting, validating, long-term storage and access of Flow Cytometer data.
- Ocean Data Standards, Vol.4: SeaDataNet Controlled Vocabularies for describing Marine and Oceanographic Datasets - A joint Proposal by SeaDataNet and ODIP projects.
- Proposal for gathering and managing data sets on marine micro-litter on a European scale. [Updated version: 07/06/2019].
- Proposal for gathering and managing data sets on marine micro-litter on a European scale. [Updated version: 19/04/2019] [Superseded by DOI: http://dx.doi.org/10.25607/OBP-495].
- SeaDataNet Cruise Summary Report (CSR) metadata profile of ISO 19115-2 - XML encoding, Version 4.0.0.
- SeaDataNet Cruise Summary Report (CSR) metadata profile, Version 4.0.0.
- SeaDataNet metadata profile of ISO 19115, Version 11.0.0.
- SeaDataNet metadata profile of ISO 19115-XML encoding, Version 11.0.0.
- SeaDataNet NetCDF format definition. Version 1.21.

The SeaDataNet community "best practices" can

be found at the following website address.

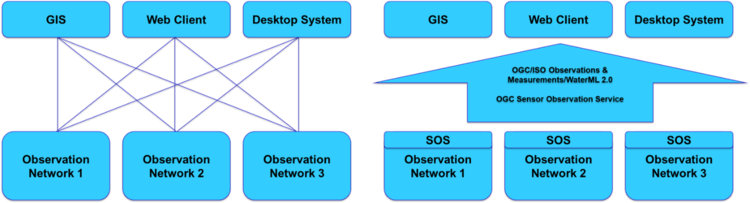

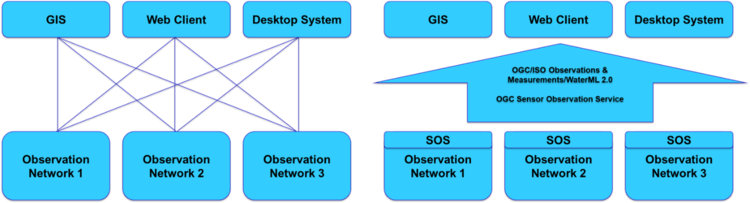

When dealing with (in-situ) observation data, there is a very large number of different sensor data encodings, data models and interfaces. This heterogeneity makes the integration of sensor data a very cumbersome task. For example, without a common standardised approach, each application that consumes sensor data would have to be customised to the individual data formats and interfaces of all the sensing devices that deliver data.

To address this issue, the Open Geospatial Consortium (OGC), an

international de-facto standardisation organisation in the field of spatial

information infrastructures, has developed the Sensor Web Enablement (SWE) framework of standards.

The OGC SWE architecture comprises several specifications facilitating the

sharing of observation data and metadata via the Web. Important building blocks

are standards for observation data models (Observations and Measurements,

O&M), for the corresponding metadata about measurement processes (Sensor

Model Language, SensorML), and interfaces for providing sensor-related

functionality (e.g. data access) via the World Wide Web (Sensor Observation

Service, SOS).

Image: Left: no standards used, requiring individual integration of all data sources; Right: reduced integration effort by using SWE standards, common interfaces and encodings

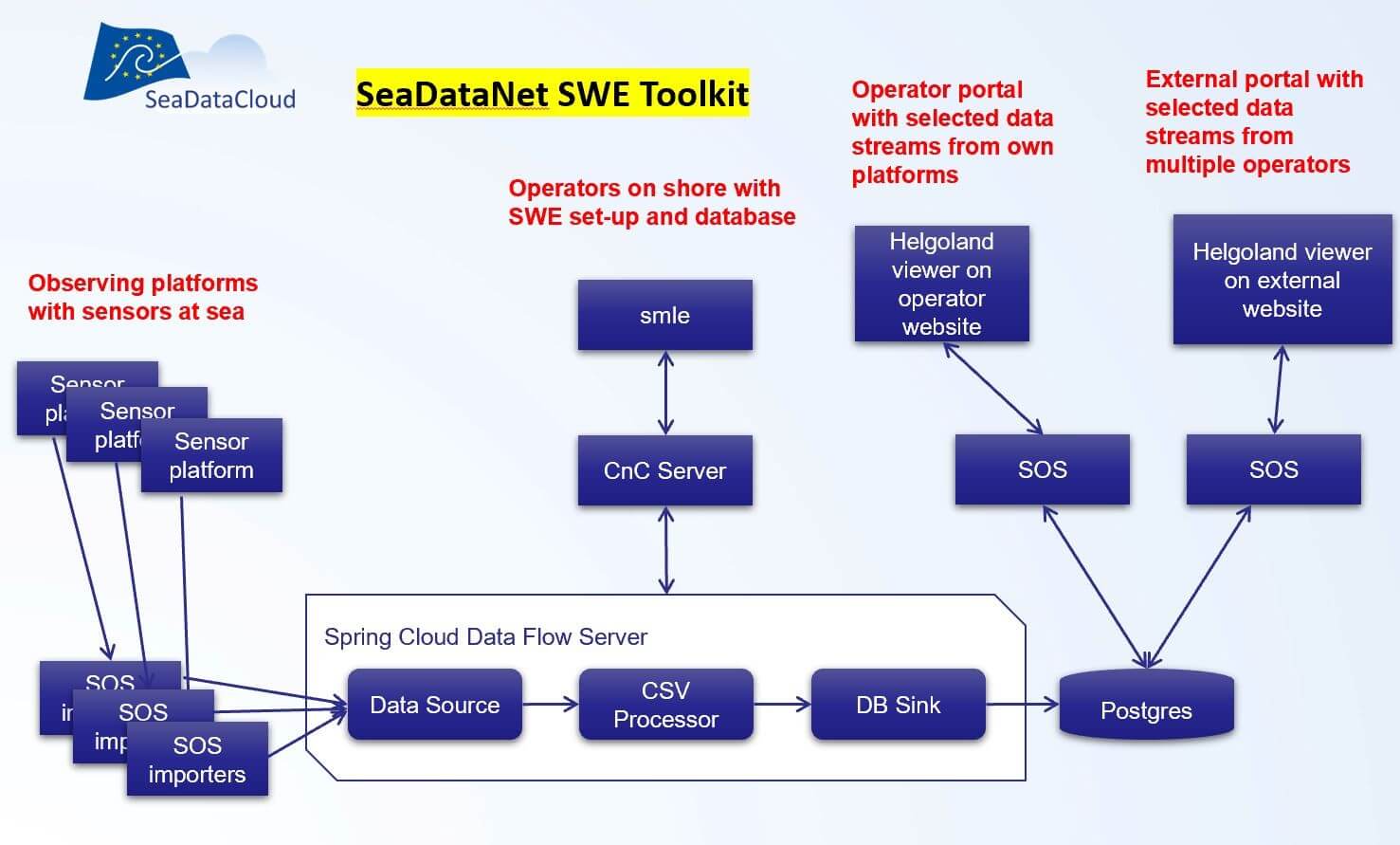

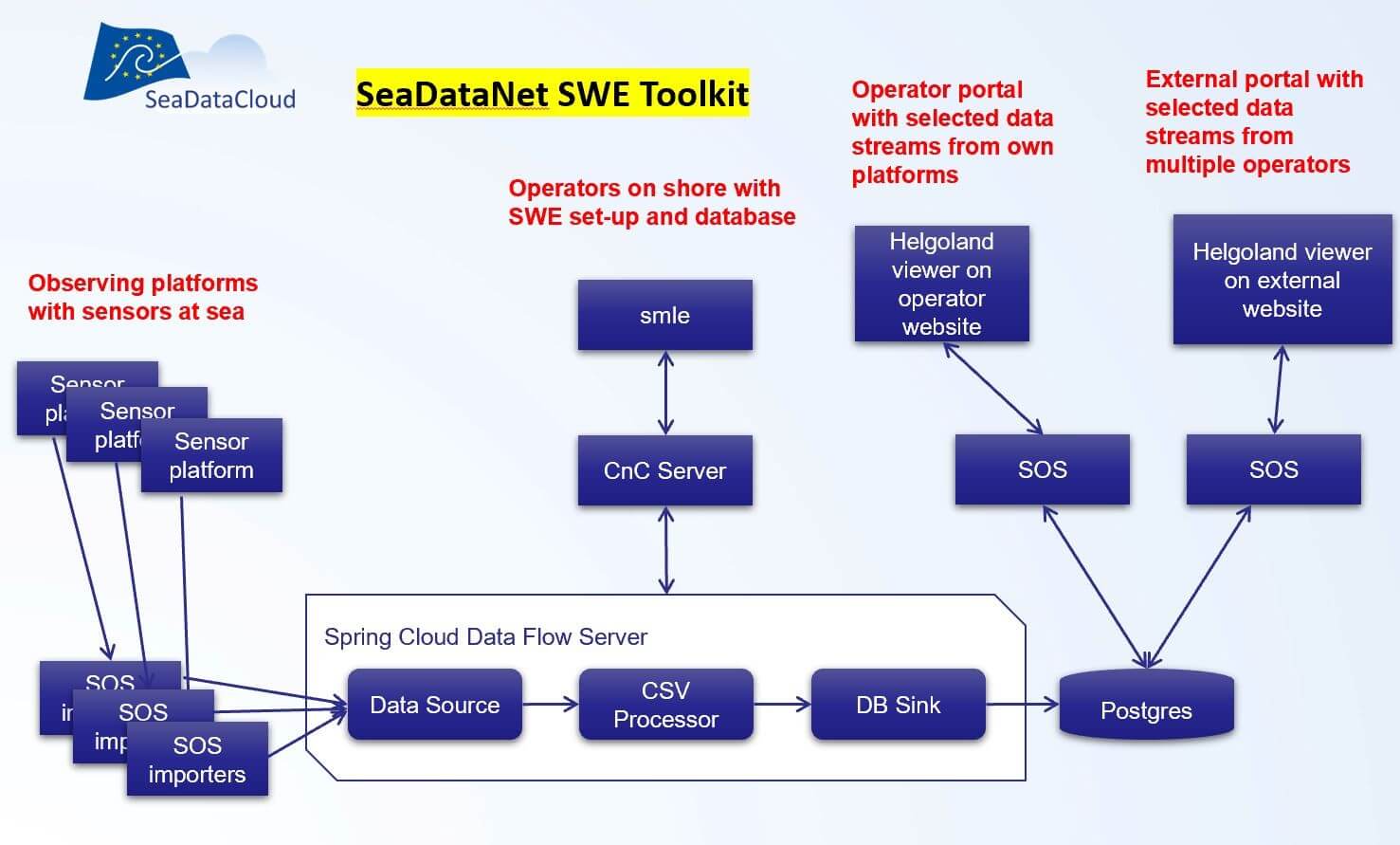
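To make the benefit of a common interface more concrete, the following Python sketch builds a Sensor Observation Service (SOS) 2.0 `GetObservation` request using the key-value-pair (KVP) binding of the standard. The endpoint URL, offering name and observed property identifier are hypothetical placeholders and do not refer to an actual SeaDataNet service; a given server may support only some bindings and filters.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def build_get_observation_url(endpoint, offering, observed_property, start, end):
    """Compose an SOS 2.0 GetObservation request in KVP form.

    `start` and `end` are ISO 8601 timestamps delimiting the phenomenon time.
    """
    params = {
        "service": "SOS",
        "version": "2.0.0",
        "request": "GetObservation",
        "offering": offering,
        "observedProperty": observed_property,
        "temporalFilter": f"om:phenomenonTime,{start}/{end}",
    }
    return f"{endpoint}?{urlencode(params)}"


if __name__ == "__main__":
    url = build_get_observation_url(
        "https://example.org/sos/service",        # hypothetical endpoint
        "buoy-42-offering",                       # hypothetical offering
        "http://example.org/def/sea_temperature", # hypothetical property URI
        "2020-01-01T00:00:00Z",
        "2020-01-02T00:00:00Z",
    )
    print(url)
    # Decode the query string again to show the parameters that were sent.
    print(parse_qs(urlsplit(url).query))
```

Because every SOS server that supports this binding understands the same request parameters, a client written once against them can in principle query any compliant data source, which is the point of the right-hand side of the image above.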

SeaDataNet strives for a common standardised approach for describing and giving discovery and access to marine data from different marine disciplines. In addition to delayed-mode data sets, which are served by the CDI data discovery and access service, standardisation efforts are also directed towards (near) real-time data streams as collected by operational sensors and platforms. For this application, the SeaDataCloud project has built upon the SWE standards to support the interoperable sharing of (near) real-time observation data streams. A SeaDataCloud team, led by partner 52°North, has developed the open source SeaDataNet SWE Toolkit, which comprises the following components:

- SWE Ingestion Service: this component helps sensor operators to receive and ingest marine observation data from platforms and sensors into a local storage database. From there, (selected) data can be published as streams of (near) real-time observation data by means of SOS servers. As a first step after installing the Ingestion Service, the structure of the data stream has to be described in the local database, specifying platforms and sensors with SWE metadata profiles, supported by SeaDataNet SWE vocabularies, and using the 52°North SMLE editor;
- SWE Viewing Services: this component, which is based on the 52°North Helgoland Sensor Web Viewer, is an application for exploring and visualising the data streams retrieved through the SOS services. The viewer supports different types of observation data. It can visualise data measured along trajectories (e.g. by research vessels) and profile data, as well as time series data showing the historic variations of one or more parameters at fixed locations (e.g. fixed buoys and sensor stations).

Image: Components of SeaDataNet SWE toolkit deployed in a possible

configuration

At the SeaDataNet

portal homepage a link is given to an SWE demonstrator

of the deployed SeaDataNet SWE Toolkit.

Image: Sensor Web Viewer demo at the SeaDataNet home page

All of the

software in the SeaDataNet SWE Toolkit is published as open source software. To

assist uptake by operators of observing platforms, the Demonstrator also

includes a web page where all relevant documentation and

GitHub resources can be found.

SeaDataNet has developed and maintains a set of tools to

be used by each data centre and freely available from the SeaDataNet

portal. It includes documentation and common

software tools for metadata and data, statistical analysis and grid

interpolation and a versatile software package for data analysis, QA-QC and

presentation. As part of the SeaDataCloud project upgrades are undertaken

taking into account new requirements. The following software versions are

current:

MIKADO, developed by IFREMER, is used to generate the XML metadata entries for the CDI, CSR, EDMED, EDMERP and EDIOS SeaDataNet catalogues. The latest version (3.6.2) was released in May 2020 with major updates: the CDI ISO 19139 format has been updated to v12.2.0 for INSPIRE compliance and the horizontal datum is now mandatory (var03); the CSR ISO 19139 formats have been updated to v5.2.1 for INSPIRE compliance; the protocol has changed from http to https for the BODC web services, the SeaDataNet schemas and the URN resolver; and CDI and CSR publications are now free links, which allows linking e.g. by DOIs.

NEMO, developed by IFREMER, enables the conversion of ASCII files of vertical profiles, time series or trajectories to SeaDataNet format files, which can be either text files in Ocean Data View (ODV) and MedAtlas formats or binary files in NetCDF format. The latest version (1.7.0) was released in April 2020 with several bug fixes and support for additional formats specifically designed for Biological, Microlitter and Flow cytometry data. Note that NEMO 1.7.0 and later versions generate files which can only be managed with RM version >= 1.0.45.

EndsAndBends, developed by IFREMER, is used to generate spatial objects from raw navigation (ship routes). Typical navigation log files record more than one location per 10 seconds (e.g. GPS outputs), and the size of these files makes them impractical to manage or visualize using standard GIS software or services (WMS, WFS and GML). EndsAndBends enables a sub-setting of the navigation files, keeping the same geographical shape of the vessel route while significantly reducing the number of geographical locations to preserve response time. The latest version of EndsAndBends (2.1.0) was released in April 2014; since then no new version has been developed.
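The idea of thinning a track while keeping its shape can be illustrated with the well-known Douglas-Peucker line simplification algorithm. The Python sketch below is a generic example of that technique; whether EndsAndBends uses this or a different method is not stated here, and the function names and the planar treatment of coordinates are simplifying assumptions.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to the segment a-b; all are (x, y) tuples."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify_track(points, tolerance):
    """Douglas-Peucker: keep the ends, and keep bends farther than `tolerance`."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], first, last)
        if d > max_dist:
            max_dist, index = d, i
    if max_dist <= tolerance:
        return [first, last]  # all intermediate points are close to the chord
    left = simplify_track(points[: index + 1], tolerance)
    right = simplify_track(points[index:], tolerance)
    return left[:-1] + right  # avoid repeating the shared split point


if __name__ == "__main__":
    track = [(0, 0), (1, 0.01), (2, 0), (3, 0.02), (4, 0), (4, 1), (4, 2)]
    print(simplify_track(track, tolerance=0.1))
```

In this toy track the nearly collinear points along each leg are dropped and only the two ends and the corner remain. For real navigation data, positions in latitude and longitude would first need a suitable projection or a geodesic distance, and very long tracks may call for an iterative rather than recursive implementation.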

OCTOPUS, developed by IFREMER, is a SeaDataNet format conversion software and also a format checker for SeaDataNet ODV, netCDF and MedAtlas files. The latest version (1.5.3.0) was released in April 2020 with several bug fixes and additional functionality: updated URLs for CDIs, CSRs and NERC vocabularies; the DPSF P09 parameter is allowed as depth below sea surface in MedAtlas files (e.g. core data); an exception is allowed for ODV files with an empty vertical reference (e.g. navigation, contaminants in biota files); and the order of the depth parameter has changed in the MGD to ODV conversion. All data providers are strongly advised to use OCTOPUS to check their ODV, netCDF and MedAtlas files before initiating submissions in order to adhere carefully to the agreed formats and rules. OCTOPUS already includes more than 500 checks. When using this version of OCTOPUS, the latest MIKADO version should also be used. Files generated with OCTOPUS 1.5.3 and later versions are only compatible with Replication Manager >= 1.0.45.
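The compatibility notes for NEMO and OCTOPUS can be expressed as a simple check. The Python sketch below is a hypothetical helper, not part of any SeaDataNet tool; it only encodes the version thresholds stated in the text and compares version numbers component by component rather than as strings.

```python
def parse_version(text):
    """Turn a dotted version such as '1.0.45' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


# Thresholds taken from the text: files from these tool versions onwards
# require Replication Manager (RM) 1.0.45 or later.
MIN_RM = parse_version("1.0.45")
RM_DEPENDENT = {
    "NEMO": parse_version("1.7.0"),
    "OCTOPUS": parse_version("1.5.3"),
}


def needs_rm_upgrade(tool, tool_version, rm_version):
    """Return True if the installed RM is too old for files from this tool."""
    threshold = RM_DEPENDENT.get(tool.upper())
    if threshold is None or parse_version(tool_version) < threshold:
        return False  # tool or version not affected by the constraint
    return parse_version(rm_version) < MIN_RM


if __name__ == "__main__":
    print(needs_rm_upgrade("NEMO", "1.7.0", "1.0.44"))
    print(needs_rm_upgrade("OCTOPUS", "1.5.3.0", "1.0.45"))
```

Comparing integer tuples avoids the pitfall of plain string comparison, where "1.0.45" would sort before "1.0.5".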

Replication Manager (RM), developed by IFREMER, handles the replication of the local data sets managed in a SeaDataNet data centre into the central cloud. The most recent version of the Replication Manager (1.0.45) was released in April 2020 for the connection of all data centres. The Replication Manager is a web application which allows data providers to submit new and updated CDI files to the new CDI import manager at MARIS and at the same time to publish the corresponding unrestricted data files in the cloud for user downloading. The restricted data files are kept locally, in a specific directory, for authorised downloading. In the latest release several fixes have been made to improve the import and the retrieval of restricted data requests. All data providers are strongly advised to upgrade to this latest version for their connections to the CDI service, in dialogue with cdi-support@maris.nl.

Ocean

Data View (ODV), developed by

AWI, provides interactive exploration, analysis and visualization of

oceanographic and other geo-referenced profile or sequence data. It is

available for all major computer platforms and currently has more than 70,000 registered users. ODV has a very rich set of interactive

capabilities and supports a very wide range of plot types. This makes ODV ideal

for visual and automated quality control. The latest release is Version 5.3.0,

released in June 2020. The ODV software is also being used for producing generic SeaDataNet data products - see the article

on SeaDataNet data products. Moreover,

an online version webODV has been developed in the framework of the

development of the SeaDataNet Virtual Research Environment (VRE) - see the

separate article on the VRE.

The Data-Interpolating Variational Analysis (DIVA) tool, developed by ULg, allows observations to be spatially interpolated (or analysed) on a regular grid in an optimal way. As a part of SeaDataNet, the DIVA method has been integrated into ODV. Features supported by the ODV/DIVA integration include proper treatment of domain separation due to land masses and undersea ridges or seamounts and the realistic estimation of water mass properties on both sides of the divides. This is important in areas, such as the Kattegat, with many islands separated by narrow channels. An online version of DIVA has been developed in the framework of the SeaDataNet Virtual Research Environment (VRE) - see the separate article on the VRE.

All tools are made freely available for downloading through the SeaDataNet portal together with manuals for installation, configuration and use.

The

SeaDataNet infrastructure comprises a network of interconnected data centres

that perform marine data management at national and local levels and that

together make their information and data resources discoverable and accessible

in a harmonized way. The SeaDataNet directory

services provide overviews of marine organisations in Europe, and

their engagement in marine research projects, managing large datasets, and data

acquisition by research vessels and monitoring programmes for the European seas

and global oceans:

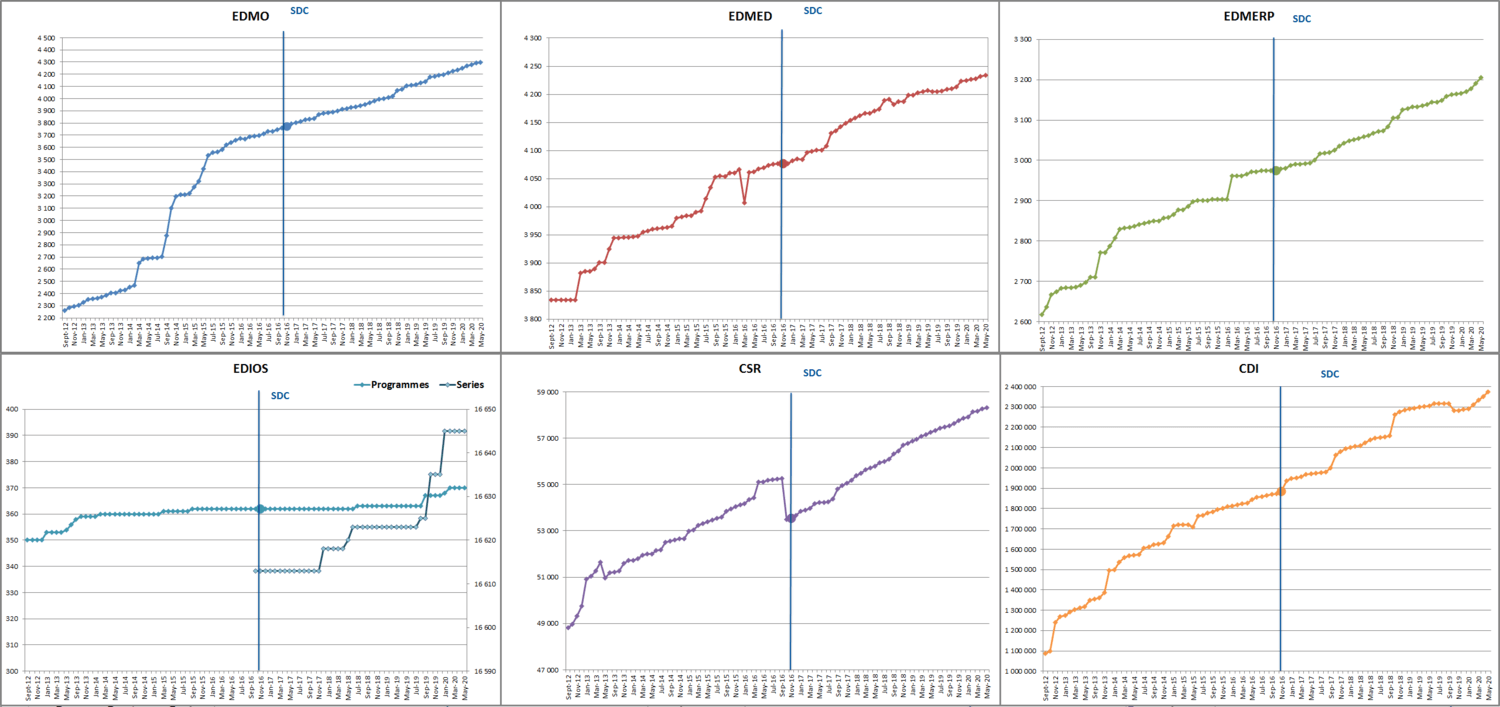

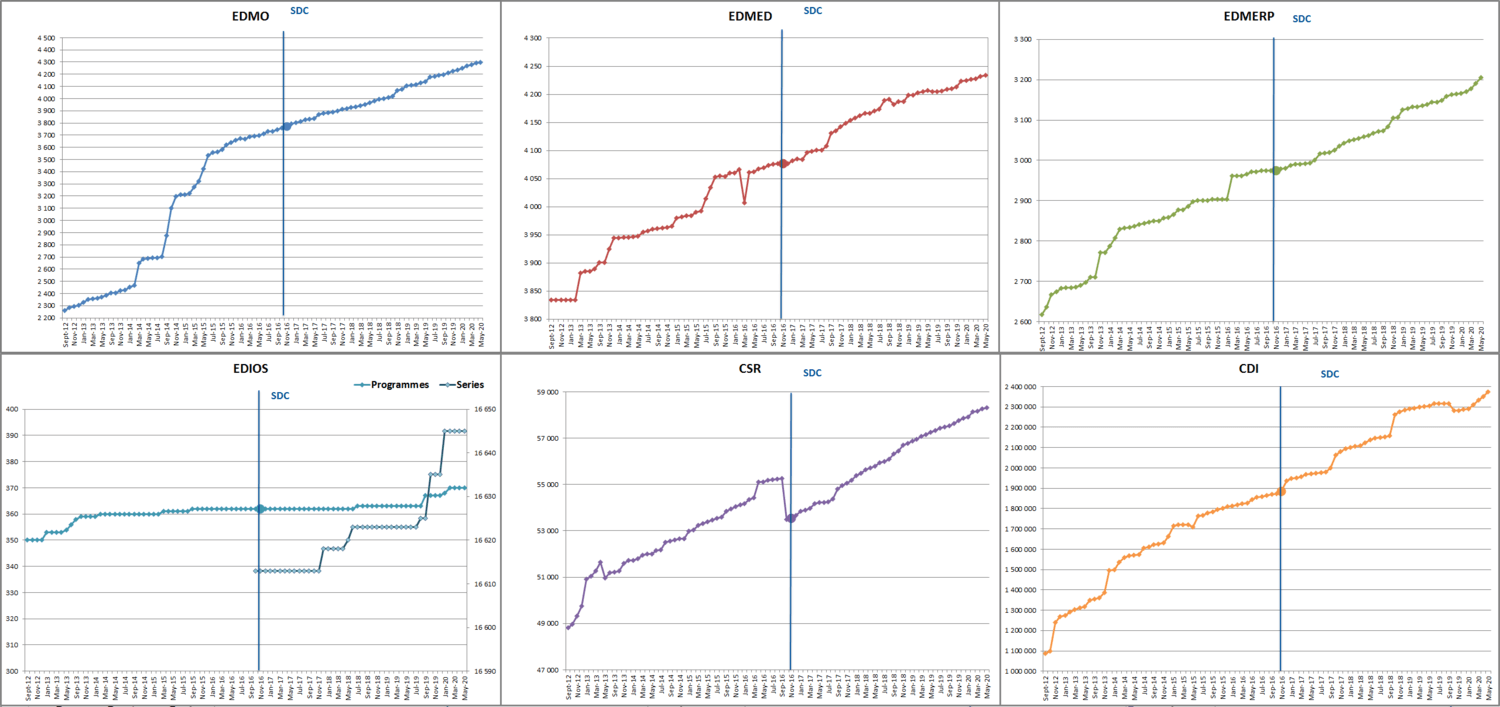

- European Directory of Marine Organisations (EDMO) (> 4,250 entries)
- European Directory of Marine Environmental Data (EDMED) (> 4,200 entries)
- European Directory of Marine Environmental Research Projects (EDMERP) (> 3,200 entries)
- European Directory of Cruise Summary Reports (CSR) (> 58,300 entries)
- European Directory of the Ocean Observing Systems (EDIOS) (> 350 programmes and > 16,500 series entries)
- Common Data Index Data Discovery and Access service (CDI) (> 2.39 million entries)

Image:

the monthly progress of each of the directories since September 2012.

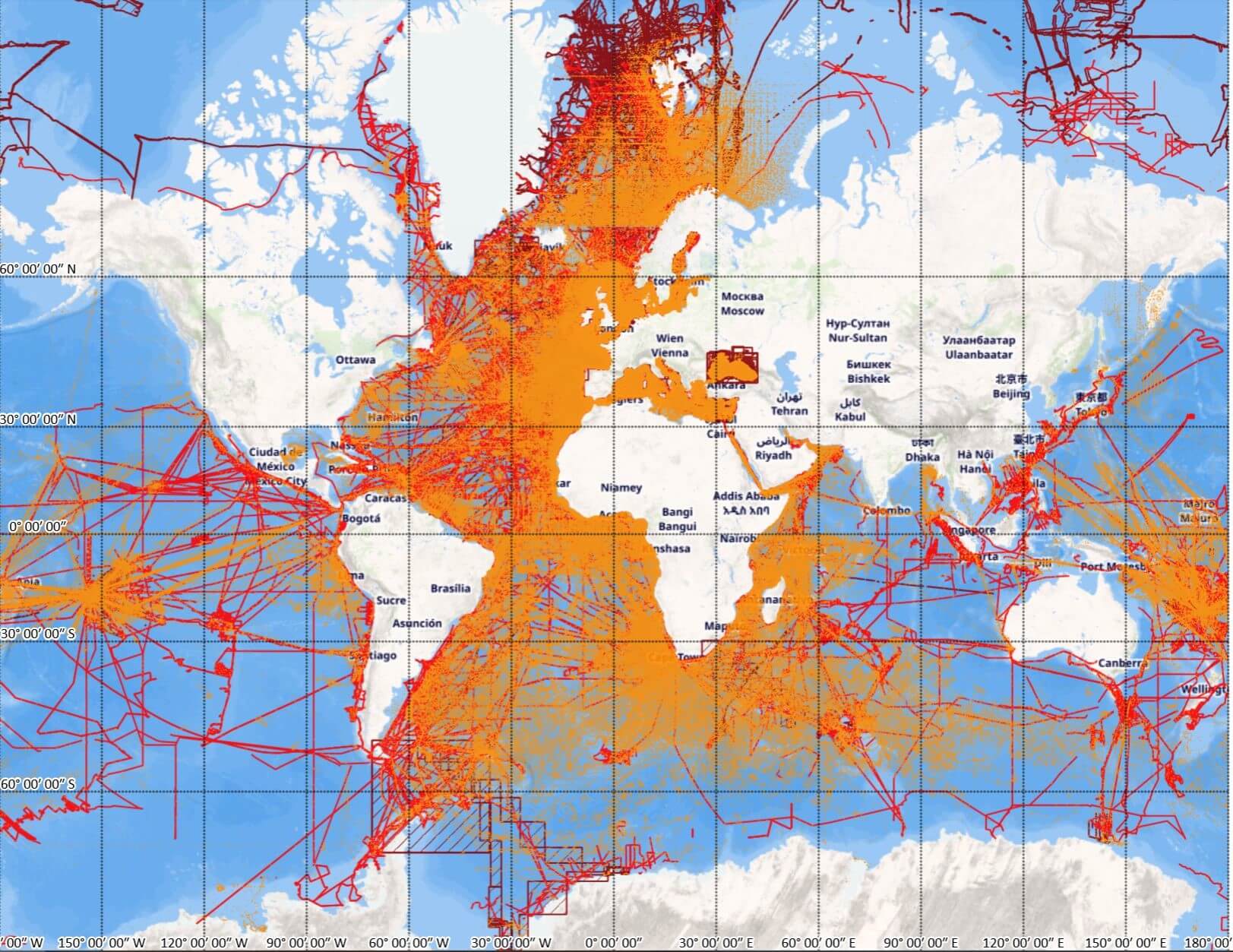

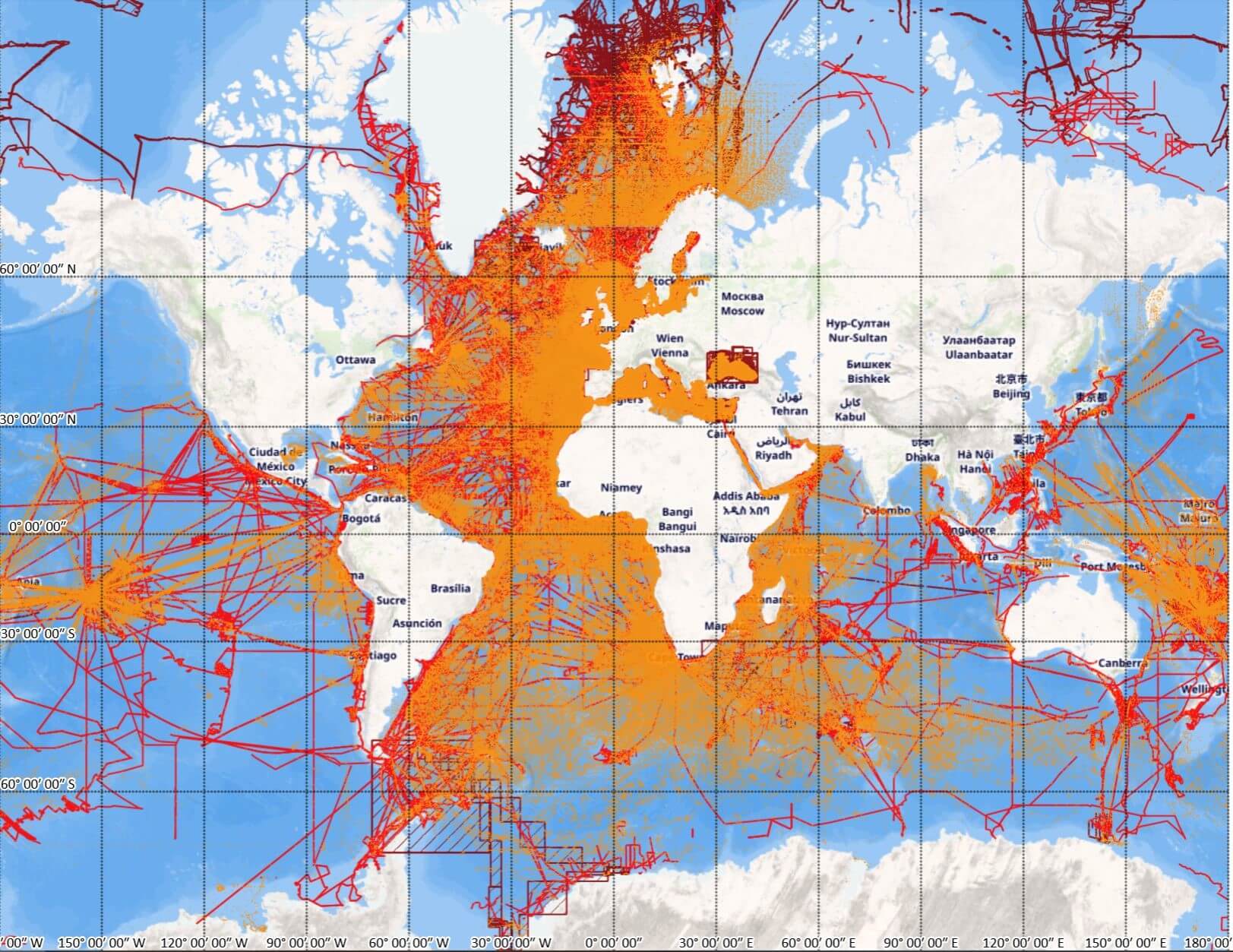

Image:

the coverage of the CDI data service with > 2.39 million entries from 110

data centres and > 750 data originators.

Users can

follow this monthly progress at

the SeaDataNet portal.

This newsletter contains many acronyms which are

described in the following list:

API: Application Programming Interface

AtlantOS: Optimising and Enhancing the Integrated Atlantic Ocean Observing Systems, an EU/H2020 Project

CDI: Common Data Index

CF: Climate and Forecast

CMEMS: Copernicus Marine Environmental Monitoring Service

CSR: Cruise Summary Reports

CSV: Comma Separated Values

CS-W: Catalogue Service for the Web

DIVA: Data-Interpolating Variational Analysis software

DOI: Digital Object Identifier

DTM: Digital Terrain Model

EDIOS: European Directory of Oceanographic Observing Systems

EDMED: European Directory of Marine Environmental Data

EDMERP: European Directory of Marine Environmental Research Projects

EDMO: European Directory of Marine Organisations

EMODnet: European Marine Observation and Data Network

EOSC: European Open Science Cloud

EuroGOOS: European Global Ocean Observing System

FAIR: Findable, Accessible, Interoperable, and Reusable

GEBCO: General Bathymetric Chart of the Oceans

GEOSS: Global Earth Observation System of Systems

GOOS: Global Ocean Observing System

HFR: High Frequency Radar

HPC: High Performance Computing

ICES: International Council for the Exploration of the Sea

ICT: Information and Communication Technologies

IMDIS: International Conference on Marine Data and Information Systems

IOC: Intergovernmental Oceanographic Commission

IODE: International Oceanographic Data and Information Exchange

JCOMM: Joint Technical Commission for Oceanography and Marine Meteorology (now Joint WMO-IOC Collaborative Board)

ISO: International Organization for Standardization

JSON: JavaScript Object Notation

MSFD: Marine Strategy Framework Directive

NetCDF: Network Common Data Form

NSF: National Science Foundation (USA)

NODC: National Oceanographic Data Centre

NRT: Near Real Time

NVS: NERC Vocabulary Services

OBIS: Ocean Biogeographic Information System

ODIP: Ocean Data Interoperability Platform (EU-FP7 and EU-H2020 Project)

ODV: Ocean Data View software

ODSBP: Ocean Data Standards and Best Practices project

OGC: Open Geospatial Consortium

QA: Quality Assurance

QC: Quality Control

RDA: Research Data Alliance

RSM: Request Status Manager

RTD: Research and Technological Development

SCOR: Scientific Committee on Oceanic Research

SDB: Satellite Derived Bathymetry

SDC: SeaDataCloud

SDN: SeaDataNet

UNESCO: United Nations Educational, Scientific and Cultural Organization

URL: Uniform Resource Locator

VRE: Virtual Research Environment

W3C: World Wide Web Consortium

WCS: Web Coverage Service

WebODV: online version of Ocean Data View software

WFS: Web Feature Service

WMO: World Meteorological Organization

WMS: Web Map Service

XML: Extensible Markup Language